August 2022

NiCE Active 365 Management Pack 4.1 released

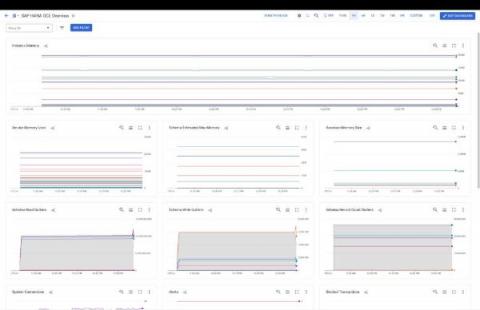

Microsoft 365 services help companies worldwide improve business and revenue by providing a best-in-class digital workspace experience. The NiCE Active 365 Management Pack complements this with advanced M365 monitoring, such as full Teams call analysis, integrated into Microsoft SCOM. Advanced monitoring and analytics help you reveal unwanted micro-events influencing the health and performance of the system and its users.

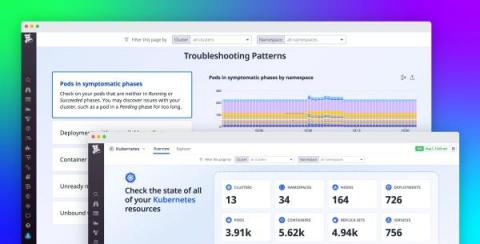

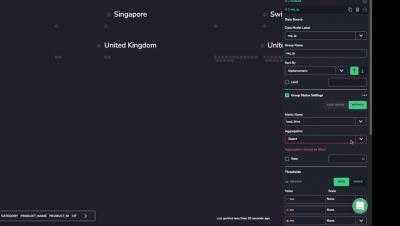

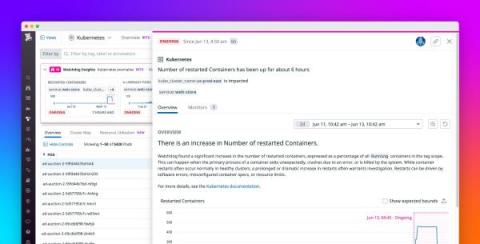

Equip any user to monitor Kubernetes with the Overview Page

Many organizations use Kubernetes to orchestrate their containerized applications. But because Kubernetes is complex, application developers may take some time to ramp up on the intricacies of monitoring a Kubernetes environment. This means that teams often need to create internal documentation and offer hands-on training to bridge the knowledge gap.

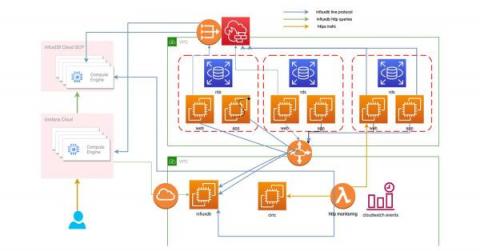

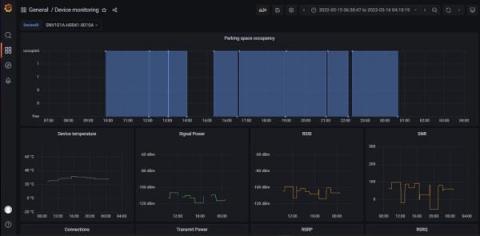

How Ekopak Manages Water Treatment Data with InfluxDB

A wide variety of industrial processes rely on water, and before it can be used, it needs to be treated to remove dissolved substances. Minerals have to be filtered out so they don’t form scale on equipment as water is heated and cooled, and bacteria need to be removed in cases involving human health. Ekopak is a Belgian company working to make water treatment more sustainable by using less water and energy where possible.

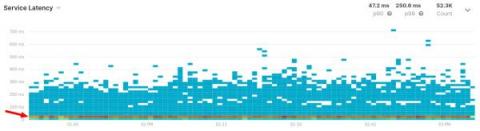

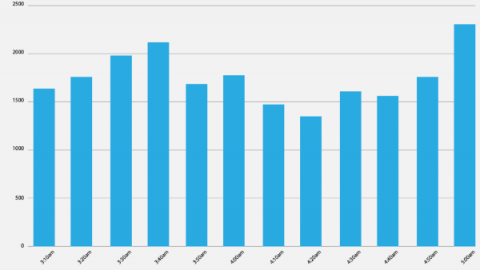

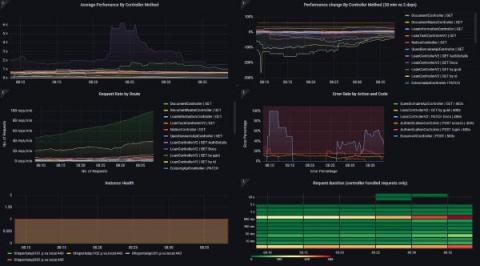

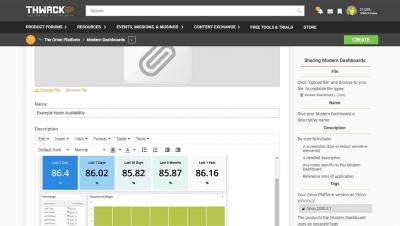

Monitor Ruby Application Performance with Magic Dashboards

Application teams must understand what their customer experience is like. This is true not only from a general perspective (in terms of usability and responsiveness) but also on a day-to-day, minute-by-minute basis. In particular, when you work with distributed systems, errors are inevitable. Site traffic fluctuates throughout the day, and any one of a system’s dependencies could also encounter an issue at any time.

Debunking 4 Cybersecurity Myths About Machine Learning

Machine learning has infiltrated the world of security tooling over the last five years. That’s part of a broader shift in the overall software market, where seemingly every product is claiming to have some level of machine learning. You almost have to if you want your product to be considered a modern software solution. This is particularly true in the security industry, where snake oil salesmen are very pervasive and vendors typically aren’t asked to vigorously defend their claims.

Observability: You Can't Buy It, You Must Build It!

In Part 1 of this series, we talked about the origins of observability and why you need it. In this blog (Part 2), we will cover exactly what observability is, what it isn’t, and how to get started. Before we can dive into how to approach observability, let’s get one thing clear: You can’t buy a one-size-fits-all observability solution.

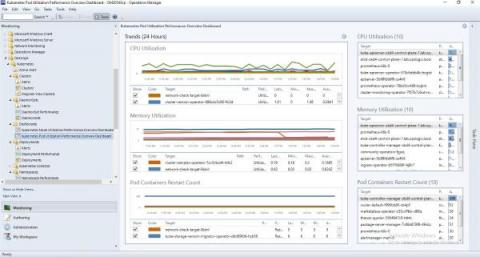

Kubernetes Management Pack for SCOM Released by OpsLogix

Using SLOs to Increase Software Reliability

4 Key Reasons Service Virtualization is a Must for Agile Teams

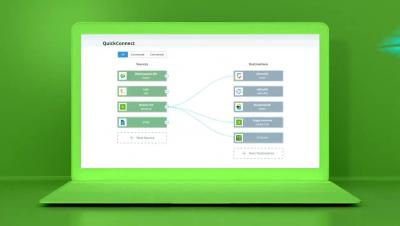

Service virtualization is not new. In fact, the concept and technology were established 20 years ago. At its core, service virtualization offers the ability to simulate behavior, data, and performance characteristics of applications and services. Through service virtualization, teams can ensure they have an on-demand environment to support their testing needs.

11 Best Redis Monitoring Tools [2022 Review]

Redis is an open-source, BSD 3-clause licensed, highly efficient in-memory data store that can be easily used as a distributed, in-memory key-value store, cache, or message broker. It is known for being extremely fast and reliable and for supporting a wide variety of data structures, making it a very versatile tool widely adopted across the industry. Redis was architected with speed in mind and is designed to keep all the data in memory.

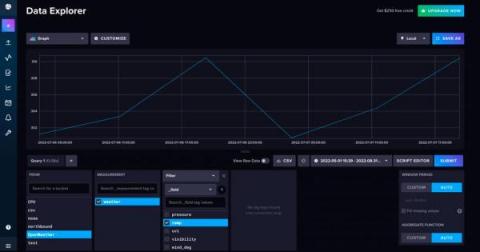

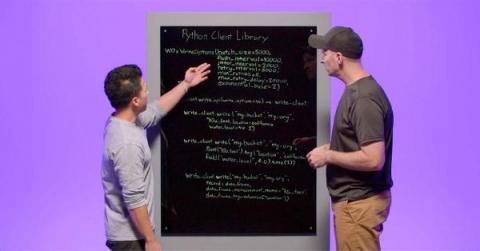

Obtaining and Storing Time Series Data with Python

In this tutorial we’ll learn how to use Python to get time series data from the OpenWeatherMap API and convert it to a Pandas DataFrame. Next we’ll write that data to InfluxDB, a time-series data platform, with the InfluxDB Python Client. We’ll convert the JSON response from our API call to a Pandas DataFrame because I find that that’s the easiest way to write data to InfluxDB.
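As a rough sketch of the flow described above, the snippet below flattens a weather-style JSON payload into rows and renders each row as InfluxDB line protocol. It uses only the standard library; the tutorial itself goes through a Pandas DataFrame and the InfluxDB Python Client. The payload shape, the `weather` measurement name, and the `to_rows` and `to_line_protocol` helpers are illustrative assumptions, not the OpenWeatherMap response format or the tutorial's code.

```python
import json

# Hypothetical payload, loosely shaped like an hourly forecast response.
SAMPLE = json.loads("""
{"city": "Antwerp",
 "hourly": [{"dt": 1661000400, "temp": 21.5, "humidity": 60},
            {"dt": 1661004000, "temp": 22.1, "humidity": 58}]}
""")


def to_rows(payload):
    """Flatten the nested payload into one flat dict per observation."""
    return [{"city": payload["city"], **hour} for hour in payload["hourly"]]


def to_line_protocol(row, measurement="weather"):
    """Render one row as: measurement,tag=value field=value,... timestamp."""
    fields = f"temp={row['temp']},humidity={row['humidity']}i"
    # dt is in seconds; line protocol defaults to nanosecond precision.
    return f"{measurement},city={row['city']} {fields} {row['dt'] * 10**9}"


rows = to_rows(SAMPLE)
lines = [to_line_protocol(r) for r in rows]
```

The flat list of dicts in `rows` is also the shape that a DataFrame constructor accepts, which is why flattening first tends to make the write step simpler.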

Harnessing the "Power of Data" with Catchpoint

For the past 25 years, I’ve been monitoring networks, applications, services, basically anything digital on a quest to understand “when is it safe to cross the road”! We all know, just blindly crossing a road is EXTREMELY dangerous!

Best Remote System Monitoring Solutions in 2022

To stay competitive, companies must monitor their assets and networks effectively, get the best results from them, and react swiftly to problems. However, this is rarely the case at companies that continue to run in a traditional, isolated setting. These companies frequently don't have precise asset performance tracking procedures.

OpenTelemetry Logs, OpenTelemetry Go, and the Road Ahead

We’ve got a lot of OpenTelemetry-flavored honey to send your way, ranging from OpenTelemetry SDK distribution updates to protocol support. We now support OpenTelemetry logs, released a new SDK distribution for OpenTelemetry Go, and have some updates around OpenTelemetry + Honeycomb to share. Let’s see what all the buzz is about this time! 🐝🐝

How adding Kubernetes label selectors caused an outage in Grafana Cloud Logs - and how we resolved it

Hello, I’m Callum. I work on Grafana Loki, including the hosted Grafana Cloud Logs offering. Grafana Loki is a distributed multi-tenant system for storing log data — ingestion, querying, all that fun stuff. It also powers Grafana Cloud Logs.

Observability-as-Code with AppDynamics: CloudFormation and AWS Elastic Container Service (ECS)

In this technical deep-dive, we'll show you how to leverage AppDynamics to observe the state of the Amazon AWS Elastic Container Service.

Simplify Cloud Data Onboarding with the NEW Data Manager

How to Save Costs With IT Monitoring

Why Parkview Health Chose Zenoss

The Enemy of Efficiency is the Lack of Automation

Microservice Application Monitoring Tips and Tricks

Microservices have grown to become one of the most optimal alternatives to monoliths. However, just building your app and releasing it to the public isn’t everything. Monitoring microservices is as important as building and releasing them. You need to maintain it to resolve issues that may occur and also introduce new features from time to time.

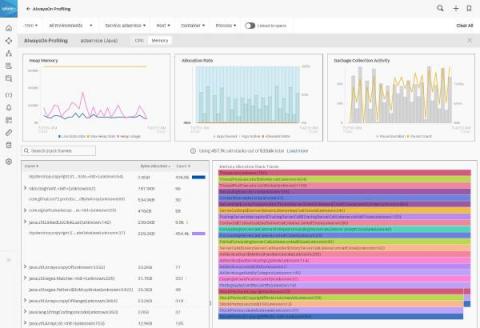

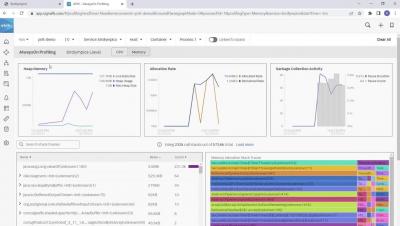

Splunk APM Expands Code Profiling Capabilities with Several New GAs

We’re excited to share new Splunk capabilities to help measure how code performance impacts your services. Splunk APM’s AlwaysOn Profiling now supports .NET and Node.js applications for CPU profiling, and Java applications for memory profiling. AlwaysOn Profiling gives app developers and service owners code-level visibility into resource bottlenecks by continuously profiling service performance, at minimal overhead.

Cloud Governance vs. Cloud Management

Measuring Cloud Instance Costs for FinOps

Achieving cost savings is one of the main drivers for cloud adoption. But for most companies, controlling cloud spend is much more challenging than anticipated. In a recent survey, 94% of IT decision makers report they are overspending in the cloud. Our own survey on cloud costs revealed 90% of executives say better cloud cost management and cost reduction is a top priority.

10 Ways MSPs Can Punch Above Their Weight

Maintaining High-Velocity Feature Development, Without Sacrificing Quality

As in any high-growth environment, expanding your suite of products and capabilities can contribute to a growing backlog of errors, and challenges prioritizing them… a scenario not lost on the team at Airtable, a connected apps platform that more than 300k organizations, including 80% of the Fortune 100, rely on to connect their teams, data, and workflows. To support organizations like Amazon and IBM, Airtable ships new features and updates through multiple deployments a week.

Digital Dexterity Enhances Remote and Hybrid Work

The leap to remote work happened nearly overnight with the pandemic, and it’s clear that hybrid work and digital dexterity are the way of the future. As a result of this shift in the way we work, companies have invested in new technologies to adapt to the growing remote world, with 69% of companies planning to increase their investment in digital tools even more in 2022. Additionally, over 90% of businesses strive to implement hybrid work environments.

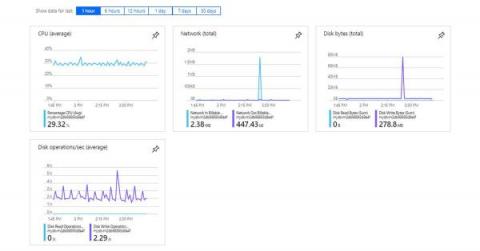

How to monitor Couchbase with Google Cloud Ops

How to get base URL in ASP.NET Core

You typically don't and shouldn't need to know where a web app is deployed. At least not from within the code of the web app itself. I keep seeing questions related to this, though. There is a range of reasons why this can still be relevant, for example if you want to generate and output an absolute URL in an MVC controller or Razor page. Here's a blog post about how to get the base URL in ASP.NET Core. Let's rewind a bit before we start looking into the code. All websites are deployed somewhere.

Icinga for Windows v1.10.0 - The Next Level

After some setbacks, we are very happy to finally release Icinga for Windows v1.10.0 today! Over the past weeks we have spent a lot of time polishing this release, testing many different aspects, and optimizing the user experience in general. Please make sure to read the upgrading docs carefully before upgrading to v1.10.0. Otherwise, your installation might not work as expected, or Icinga for Windows might not load anymore.

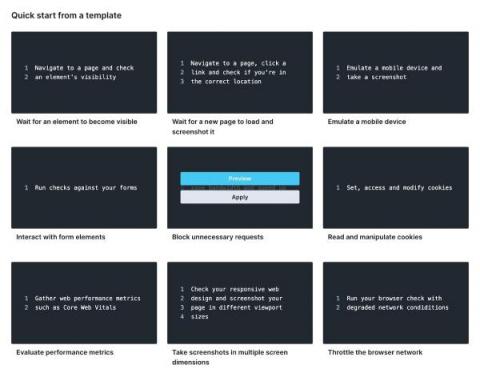

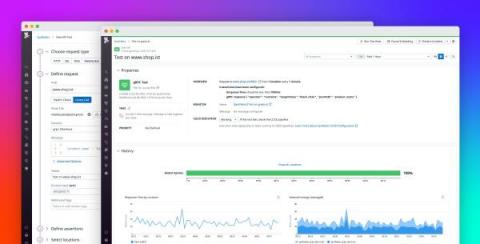

Fire up new Browser checks with our new templates

Let’s admit it: end-to-end testing is a technical challenge. How do you make features testable? What testing framework should you use? When should you run your test suite? There are so many things to learn and consider. At Checkly, we want to ease end-to-end monitoring so that you can focus on shipping excellent software instead of figuring out how you monitor and test it. But before getting into our latest feature addition, let me answer the above questions.

Under 10 minutes: Logs and metrics from Kubernetes to Coralogix

Rackspace IT Tool Consolidation with Zenoss

Out Of Office Monitoring Tips for the End of Summer

Employees are returning from vacation, the weather is (finally) cooling down and summer is coming to a close. It can feel a bit overwhelming returning to work and getting back into the swing of things. When it comes to website monitoring, there are simple steps you can take to make sure your transition is as smooth as possible. Take advantage of a few ounces of pre-vacation prevention to save on pounds of post-holiday cure.

Why ITOps should care about monitoring application performance

Introducing Unified Observability Platform by VMware Aria Operations for Applications

At VMware, we are on a mission to build a comprehensive, extensible, and intelligent monitoring and observability platform to help businesses run seamlessly. Over the past few years, we have evolved our platform to deliver invaluable end-to-end observability across applications and infrastructure.

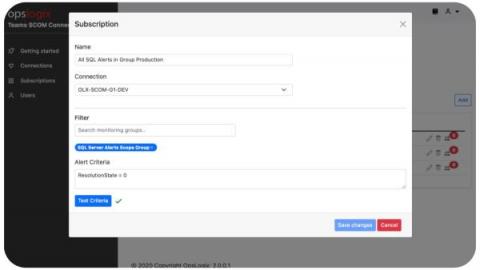

OpsLogix SCOM Connector for Microsoft Teams: Major New Updates Released!

How to: Deadman Check to Alert on Service Outage

Whether you’re using InfluxDB to record massive amounts of historical stock market data to analyze the current economic trends or simply to monitor the number of times the lights in your smart home turn on and off to cut down on wasted electricity, a sudden shock or delay in the flow of incoming data can be detrimental to your operation in the majority of scenarios.
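The core idea of a deadman check can be sketched in a few lines: compare the timestamp of the most recent point with the current time and raise an alert once the gap exceeds a threshold. The `is_dead` function and the five-minute threshold below are hypothetical choices for illustration, not InfluxDB's built-in check.

```python
from datetime import datetime, timedelta, timezone


def is_dead(last_seen, now, threshold=timedelta(minutes=5)):
    """Return True when no point has arrived within the threshold window."""
    return now - last_seen > threshold


# Fixed timestamps keep the example deterministic.
now = datetime(2022, 8, 20, 12, 0, tzinfo=timezone.utc)
recent = now - timedelta(minutes=1)   # data is still flowing
stale = now - timedelta(minutes=12)   # the source has gone quiet

recent_dead = is_dead(recent, now)
stale_dead = is_dead(stale, now)
```

In practice the threshold would be chosen relative to how often the source is expected to report, so that normal gaps between writes do not trigger the alert.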

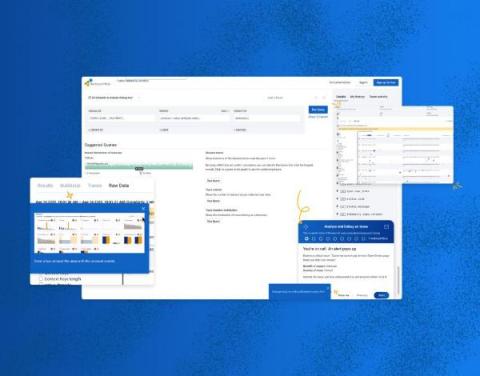

Anomaly Detection and AIOps - Your On-Call Assistant for Intelligent Alerting and Root Cause Analysis

In this blog, we examine how anomaly detection helps by setting up healthy alerts and providing efficient root cause analysis. Anomaly detection, part of AIOps, guides your attention to the places and times where remarkable things occurred. It reduces information overload, thereby speeding up RCA investigation.

FinOps: Measuring Allocatable Cloud Spend

Cloud services are the number one source of unexpected overspending for companies today. As a result, cloud financial management is a major focus for most organizations. But how do you track the success of cloud efficiency? Full allocation of multicloud costs is a critical component for understanding your actual cloud services usage, establishing cloud cost management ownership, and creating accurate budgets and forecasts at the line of business, project, application and even team levels.

What is FSLogix?

FSLogix is a profile management solution used to apply personalization to user sessions for application and desktop virtualization technologies such as Citrix and Microsoft Azure AVD (Azure Virtual Desktop) and to enable “roaming profiles”. It used to be common to copy a profile to and from the network when a user signed in and out of a remote environment. Because user profiles can often be large, sign-in and sign-out times often became unacceptable.

How to Drive IT Efficiencies While Reducing Spend

How Does Unified Monitoring Reduce MTTR?

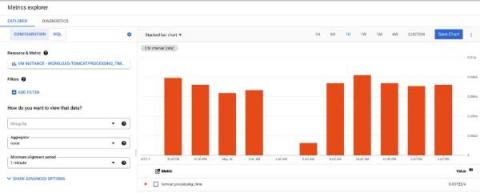

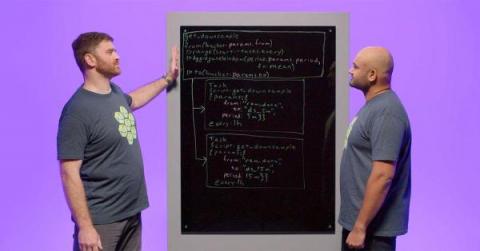

Aggregations and Chains: Performance Measurement in Cribl Stream Pipelines

In this post, we’ll discuss two functions in the Cribl Stream arsenal: the Aggregations function, which allows you to perform stats and metrics collection in flight, and the Chain function, which allows you to call one Pipeline from within another. The event flow will continue when the Chained Pipeline returns. To demonstrate their use, we’ll answer this question: How long did it take for Cribl to process events using your pipeline?
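To make the idea of in-flight aggregation concrete, here is a minimal sketch that groups events into fixed time windows and summarizes how long each took to process. It is plain Python rather than Cribl Stream configuration, and the `_time` and `elapsed_ms` field names and the 10-second window are assumptions made for the example.

```python
from collections import defaultdict


def aggregate(events, window_s=10):
    """Group events into fixed time windows and summarize processing time."""
    buckets = defaultdict(list)
    for event in events:
        # Align each event to the start of its window.
        start = event["_time"] - event["_time"] % window_s
        buckets[start].append(event["elapsed_ms"])
    return {
        start: {
            "count": len(vals),
            "avg_ms": sum(vals) / len(vals),
            "max_ms": max(vals),
        }
        for start, vals in sorted(buckets.items())
    }


events = [
    {"_time": 100, "elapsed_ms": 4},
    {"_time": 103, "elapsed_ms": 6},
    {"_time": 112, "elapsed_ms": 10},
]
summary = aggregate(events)
```

Each window yields one summary record instead of one record per event, which is the main reason aggregation reduces the volume sent downstream.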

Enterprise vs. SMB IT: What's the Difference?

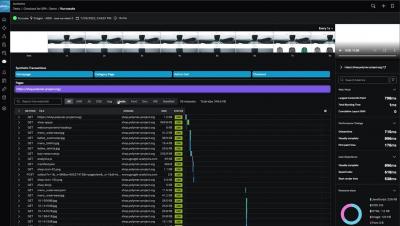

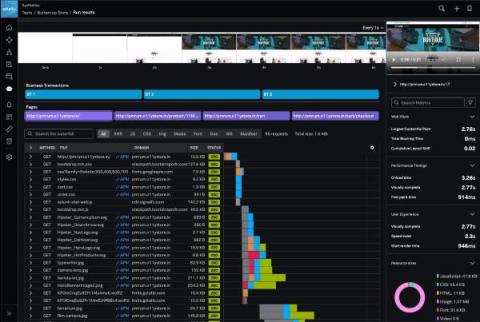

Splunk Synthetic Monitoring in Splunk Observability Cloud - Product Demo

Using Splunk Observability Cloud to Monitor Splunk RUM

As a principal engineer on the Splunk Real User Monitoring (RUM) team who is responsible for measuring and monitoring our service-level agreements (SLAs) and service-level objectives (SLOs), I depend on observability to measure, visualize and troubleshoot our services. Our key SLA is to guarantee that our services are available and accessible 99.9% of the time.
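To put the 99.9% figure in perspective, the arithmetic below converts an availability target into the downtime it permits. The 30-day period and the `allowed_downtime_minutes` helper are assumptions for illustration; the actual measurement window of the SLA is not stated here.

```python
def allowed_downtime_minutes(availability, period_days=30):
    """Minutes of downtime permitted by an availability target over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability)


# 99.9% over 30 days leaves roughly 43 minutes of downtime.
budget = allowed_downtime_minutes(0.999)
```

Tightening the target to 99.99% over the same period shrinks the budget by a factor of ten, to a little over four minutes.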

Product Roadmap Template

A product roadmap helps you say goodbye to scattered information across emails, meetings, and messages. And a typical roadmap includes.

Business Templates for Organizations of All Sizes

In need of expert-tested, easy-to-use business templates to help you save time and improve productivity? Well, here’s a bundle of templates for business planning, financial planning and budgeting, inventory management, and more. For example.

Middleware 101

In computer science, systems are typically divided into two categories: software and hardware. However, there is an additional layer in between, referred to as middleware, which is a software pipeline—an operation, a process, or an application between the operating system and the end user. This article aims to define middleware and reflect on its necessity, as well as address controversies about when and where it applies.

Monitor your Microsoft Azure VMs featuring Ampere Altra Arm-based CPUs with Datadog

As organizations continue to expand their cloud footprint, managing costs without risking application performance is a priority. Because of this, Arm processors have become popular for their efficient, cost-effective processing power. Microsoft Azure’s new series of Azure Virtual Machines are powered by Ampere Altra Arm-based processors, which provide excellent price performance for scale-out and cloud-native workloads.

Observability: A Concept That Goes Back to the Founding of the Internet

With its market size reaching more than $2 billion in 2020, you’d think that a universal definition of the term observability would have emerged by now. But it turns out that a clear definition of a term or industry isn’t necessarily a prerequisite for the rapid growth of its market size — just ask everyone at your next dinner party to define blockchain for you and see how many different answers you get!

A Quick Guide to Observability vs APM vs Monitoring

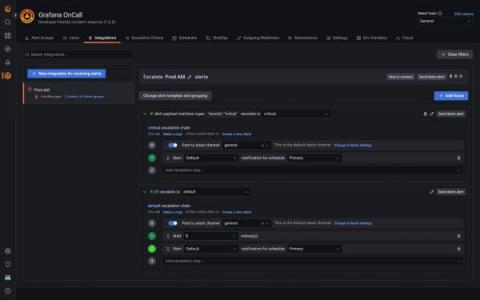

Get started with Grafana OnCall and Terraform

Managing on-call schedules and escalation chains, especially across many teams, can get cumbersome and error prone. This can be especially difficult without as-code workflows. Here on the Grafana OnCall team, we’re focused on making Grafana OnCall as easy to use as possible. We want to make it easier to reduce errors with your on-call schedules, create schedule and escalation templates quickly, and fit on-call management into your existing as-code patterns.

Dashboards Can Be More Than Just Expensive TV

Someone once described dashboards to me as “expensive TV for software engineers.” At first, I stood there quietly shocked—dashboards had informed many root cause analyses (RCAs) in my life as a developer.

Ignoring Health Check Endpoints from Metrics and Traces

Your liveness and readiness HTTP endpoints can generate a bunch of useless Spans and can mess with your latency metrics. In this post we’ll learn how to configure Kamon Telemetry to completely ignore these endpoints for good.

Elastic Observability helps monitor your Azure workloads on the new Arm-based VMs

Microsoft Azure’s recently launched new Azure Virtual Machines (VMs) feature the Ampere Altra Arm-based processor. These new VMs are engineered to efficiently run horizontally scalable workloads such as web servers, application servers, and open source databases. They deliver excellent price-performance and represent an important addition to Microsoft Azure's portfolio of instance types.

Sentry Spend Allocation

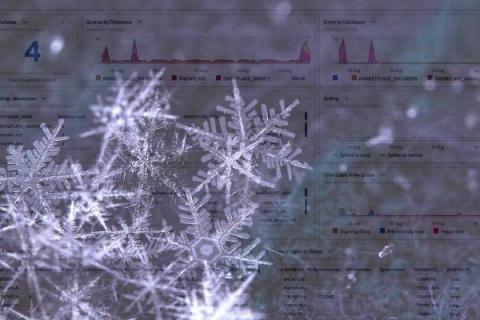

Snowflake DB: Observing a Snowflake From Cloud to Chart

You’ve probably heard something like this before: “It’s a managed service! We don’t need to worry about anything!” But when it comes to your production workloads, database monitoring is imperative. With the new Snowflake Dashboards and Detectors in the Splunk Observability Content Contributors repository you can start seeing the details of individual Snowflakes.

IT monitoring reduces the workload of retailers by about 30%

Food retailers reduce the workload accumulated by their IT departments by almost 30% thanks to monitoring. Controlling, supervising, and organizing data and information from the whole company through a single system shortens response times when errors and failures occur, improves resource management and organization, and increases the effectiveness of the business activity. In addition, monitoring saves costs.

Monitoring outages in your third-party services with LogSnag

LogSnag is the new kid on the block, but we bet that it will become relevant in this space soon. Today we will show you how to monitor outages and get alerts for your third-party services within LogSnag. What is LogSnag? Here's the intro, but you can learn more at the official LogSnag website.

Creating Homebrew Formulas with GoReleaser

The SRE's Quick Guide to Kubectl Logs

Logs are key to monitoring the performance of your applications. Kubernetes offers a command-line tool called kubectl for interacting with the control plane of a Kubernetes cluster. This tool provides debugging, monitoring, and, most importantly, logging capabilities. There are many great tools for SREs. However, Kubernetes supports Site Reliability Engineering principles through its capacity to standardize the definition, architecture, and orchestration of containerized applications.

Monitor Akamai Datastream 2 with Datadog

Akamai is one of the world’s largest CDN solution providers, helping companies greatly accelerate the secure delivery of content to their users all across the globe. Akamai provides this content delivery through its Intelligent Edge Platform, which is made up of hundreds of thousands of edge servers distributed around the planet.

Jaeger distributed tracing - complete guide

Network Blind Spots Are Endangering Your Business

The Top 15 Distributed Tracing Tools (Open Source & More)

As distributed environments become more complex, users often use distributed tracing tools to improve the visibility of issues evident within their traces. Throughout this post, we will examine some of the best open-source and other generally popular distributed tracing tools available today.

The unreasonable effectiveness of shipping every day

Webinar Highlights: Improving Clinical Data Accuracy - How to Streamline a Data Pipeline Using Node.js, AWS and InfluxDB

Given the global health crises the world has faced over the last few years, the need for expeditious but accurate medical trials has never been more important. The faster clinical trial data is validated, the faster medicines get approved and treatments become available. Pinnacle 21’s customers are driving forces behind creating life-saving treatments.

Level Up Your DevOps Strategy with Intelligent Alerting

Data Value Gap - Data Observability and Data Fabric - Missing Piece of AI/AIOps

How to choose the right hyperscaler for your SAP solution

If you’re in the market for a new SAP HyperScaler, you may have been wondering what exactly you should look for. Maybe you’re wondering which model is best for your needs. Don’t worry, we’ve got you covered. We’ve done all the research and found the best SAP HyperScaler on the market. You’ll also find a buying guide to help you navigate the process and find the right SAP HyperScale server for your needs.

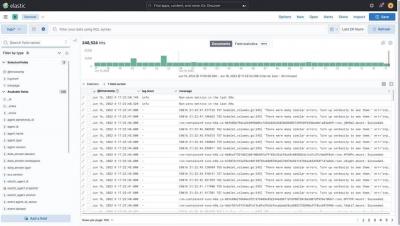

Finding insights and taking action in Discover

Getting Started with Sematext Cloud

Schneider Electric consolidates monitoring tools by 83% with LogicMonitor

To Dynamically Sample or Not to Dynamically Sample | Snack of the Week

ManageEngine OpManager MSP is now available in 9 more languages!

ManageEngine OpManager MSP is a reliable solution solely developed to help managed service providers with monitoring client networks and servers exhaustively. It has a wide range of out-of-the box features to help MSPs in their multi-client network monitoring journey. The ManageEngine team works hard to make products that cater to the needs of MSPs, which is why OpManager MSP undergoes regular improvements, because even the most feature-rich tools need tune-ups every now and then.

How to Perform Geolocation Testing to Ensure Your Website Works Globally

So, you have launched a website intending to reach a worldwide audience? If you're running a business, this could be the first step to growing your brand. But is your website really ready to go global? After all, just because your website works for a user in the United States doesn't mean it will be accessible to a user in Japan. For one, not everyone speaks the same language. Does your website offer translation for users visiting from different global locations?

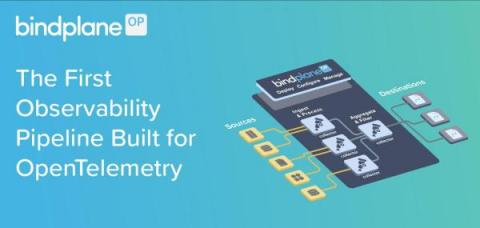

Configuring an OpenTelemetry Collector to connect to BindPlane OP

What is Kubernetes CrashLoopBackOff? And how to fix it

CrashLoopBackOff is a Kubernetes state representing a restart loop that is happening in a Pod: a container in the Pod is started, but crashes and is then restarted, over and over again. Kubernetes will wait an increasing back-off time between restarts to give you a chance to fix the error. As such, CrashLoopBackOff is not an error in itself, but indicates that there’s an error happening that prevents a Pod from starting properly.
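The increasing back-off can be illustrated with a small calculation. The sketch below assumes the delay starts at 10 seconds, doubles after each crash, and is capped at five minutes, which matches the defaults described in the Kubernetes documentation; the `backoff_delays` helper is hypothetical and is not kubelet code.

```python
def backoff_delays(restarts, base_s=10, cap_s=300):
    """Delay before each restart: doubles from base_s, never exceeds cap_s."""
    return [min(base_s * 2 ** i, cap_s) for i in range(restarts)]


# Seven consecutive crashes: the delay doubles until it reaches the cap.
delays = backoff_delays(7)
```

Once the cap is reached, each further restart waits the full five minutes, so a Pod stuck in this state is retried only a handful of times per hour.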

An Introduction to PromQL: How to Write Simple Queries

PromQL is a flexible language designed to make it easy for users to perform ad-hoc queries against their data. By default, Prometheus indexes all of the fields in each metric except for source and target. Prometheus is an open-source tool that lets you monitor Kubernetes clusters and applications. It collects data from monitoring targets by scraping metrics HTTP endpoints.

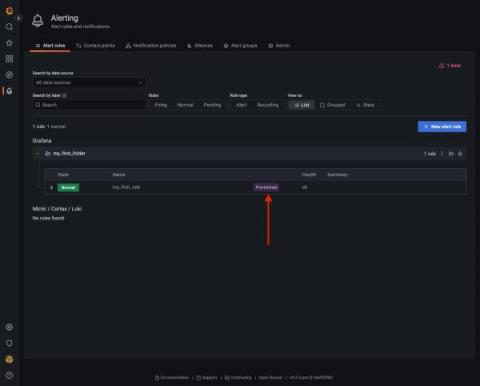

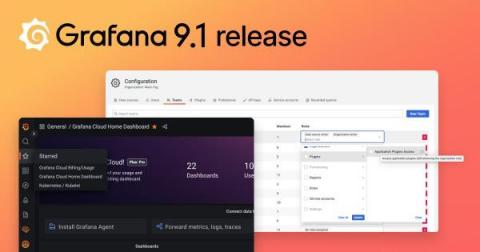

New in Grafana Alerting: File provisioning

We are happy to announce that file provisioning for Grafana Alerting has arrived in Grafana 9.1. This feature enables you to configure your whole alerting stack using files on disk, as you may already do with data sources or dashboards. The Terraform Grafana provider has also been updated to allow the provisioning of Grafana Alerting resources.

Intro to OEE

Efficient manufacturing is important for saving companies time, money, and energy. Making decisions based on data can improve efficiency, but there’s a lot of data to sort through. Manufacturing equipment contains many sensors, especially in the IIoT space. Overall Equipment Effectiveness (OEE) was first described by Seiichi Nakajima in the mid-twentieth century as part of his Total Productive Maintenance (TPM) method.

ROI Benefits of APM Tools

Software applications have become crucial for business growth and success in today's world. However, as businesses become increasingly competitive, the necessity to provide top-notch software applications is also increasing. Additionally, as organisations gravitate towards developing extensive, feature-rich applications, they are witnessing an increase in software complexity – that can often cause things to get out of hand very quickly.

This Month in Datadog: Episode 14

Monitoring - Best Practices for Alerting - New Whitepaper!

When evaluating a monitoring product, it is essential you fully understand its alerting capabilities. Alerting is a responsive action triggered by a change in conditions within the system being monitored. Typically, an alert can be defined by a condition to trigger the alert and an action defining what that alert should do when the trigger condition occurs.
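The condition-plus-action model described above can be sketched as a small data structure. The `AlertRule` class, the 90% CPU threshold, and the list used as a notification sink are all hypothetical and do not correspond to any particular product's API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AlertRule:
    name: str
    condition: Callable[[float], bool]    # trigger condition
    action: Callable[[str, float], None]  # what to do when triggered

    def evaluate(self, value: float) -> bool:
        """Run the action if the condition holds; report whether it fired."""
        fired = self.condition(value)
        if fired:
            self.action(self.name, value)
        return fired


notifications = []
rule = AlertRule(
    name="high_cpu",
    condition=lambda v: v > 90.0,
    action=lambda name, v: notifications.append(f"{name}: {v}"),
)

rule.evaluate(42.0)   # below the threshold, nothing happens
rule.evaluate(97.5)   # above the threshold, the action runs
```

Keeping the condition and the action separate means the same trigger can be routed to email, chat, or a ticketing system without changing how it is evaluated.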

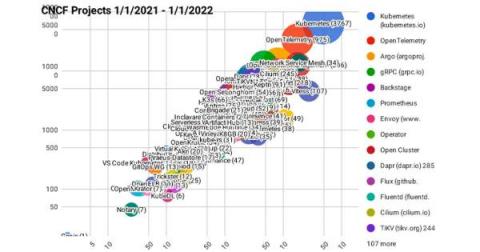

Building a Cost-Effective Full Observability Solution Around Open APIs and CNCF Projects

Monitor 70,000 Managed Resources in a Single Pane of Glass

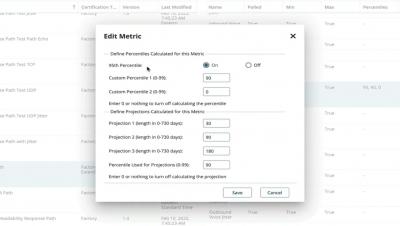

Experience-Driven NetOps: What It Is and Why It Matters

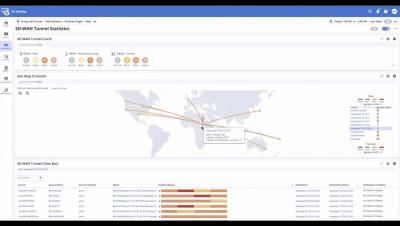

Recent events have changed the world forever. For network operations (NetOps) teams, it means there’s no going back to the way things used to be. Virtually overnight, teams had to adapt to work-from-anywhere (WFA) models. The move to SaaS, cloud, and SD-WAN continues to accelerate—and in the process fundamentally alter the nature of network environments.

How to always match the correct DOM elements with Playwright's strict mode

Get Alerted to mission-critical issues directly in Slack

Monitor your Edgecast CDN with Datadog

Edgecast is a global network platform that provides a content delivery network (CDN) and other solutions for edge computing, application security, and over-the-top video streaming. Using Edgecast’s JavaScript-based CDN, teams can improve web performance by caching static and dynamic content with low latency and minimal overhead.

How much does RPKI ROV reduce the propagation of invalid routes?

Earlier this year, Job Snijders and I published an analysis that estimated the proportion of internet traffic destined for BGP routes with ROAs. The conclusion was that the majority of internet traffic goes to routes covered by ROAs and is thus eligible for the protection that RPKI ROV offers. However, ROAs alone are useless if only a few networks are rejecting invalid routes.
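For readers unfamiliar with how a network decides that a route is invalid, the sketch below follows the origin validation logic of RFC 6811: a route is valid if a covering ROA authorizes its origin AS and prefix length, invalid if ROAs cover it but none match, and not-found otherwise. The ROA entry, AS numbers, and `validate` helper are made-up examples using documentation address space, not data from the analysis.

```python
import ipaddress

# Hypothetical ROA: (prefix, max length, authorized origin AS).
ROAS = [
    (ipaddress.ip_network("192.0.2.0/24"), 24, 64500),
]


def validate(prefix, origin_as, roas=ROAS):
    """Classify a route announcement as valid, invalid, or not-found."""
    route = ipaddress.ip_network(prefix)
    # A ROA covers the route when the route falls inside the ROA prefix.
    covering = [
        roa for roa in roas
        if route.version == roa[0].version and route.subnet_of(roa[0])
    ]
    if not covering:
        return "not-found"
    for _, max_len, asn in covering:
        if route.prefixlen <= max_len and origin_as == asn:
            return "valid"
    return "invalid"


results = {
    "authorized": validate("192.0.2.0/24", 64500),
    "wrong_origin": validate("192.0.2.0/24", 64511),
    "too_specific": validate("192.0.2.0/25", 64500),
    "no_roa": validate("203.0.113.0/24", 64500),
}
```

A network that enforces ROV drops the routes classified as invalid, which is why protection depends on how many networks actually perform this check.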

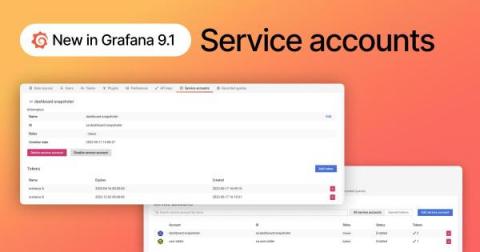

New in Grafana 9.1: Service accounts are now GA

With the Grafana 8.5 release, we introduced the concept of service accounts. Now with the Grafana 9.1 release, we’re making service accounts generally available. This is a project that came out of technical necessity, but it has given us the opportunity to reflect on API tokens and machine-to-machine interaction across Grafana Labs.

How Zenoss Is Key to Efficient Operations in 4G and 5G

Telegraf Tips from InfluxDB University Experts

Telegraf is a very powerful open source plugin-based agent that gathers data from stacks, sensors, and systems and sends it to a database. It collects data from an input and sends it to an output, and gives you the option to transform data with aggregators and processors before it reaches its endpoint.

Authors' Cut - Actionable SLOs Based on What Matters Most

SLOs—or Service Level Objectives—can be pretty powerful. They provide a safety net that helps teams identify and fix issues before they reach unacceptable levels and degrade the user experience. But SLOs can also be intimidating. Here’s how a lot of teams feel about them: We know we want SLOs, we’re not sure how to really use them, and we don’t know how to debug SLO-based alerts. Don’t worry, we’ve got your answer—observability!

How to use IPAM? Part Two: VLAN and supernetting

Do you want to learn more about the IPAM management tool? Visit the first part of the video https://youtu.be/-ByuHaNnrDs

Do you want to test the power of all-in-one monitoring? Request a free trial of the Enterprise version on our website: https://bit.ly/2O9hoRf

What's the Perfect IT Support Staff Ratio?

Building Auvik Into Your MSP's SOP (Video)

How to Generate Client Referrals as an MSP

Observing and monitoring Azure Cosmos DB with AppDynamics

As organizations increasingly adopt cloud-first strategies, it’s important to have data stores that have internet-level scalability to power these cloud native applications. For applications running on Azure, the logical choice is Azure Cosmos DB.

Welcome to the Experience-Driven NOC

At Broadcom Software, we strive to build the most scalable operational software in the market. We work to ensure that our network monitoring software can track how constant network changes affect user experiences. As a global provider of networking equipment, we understand that there will always be changes happening on today’s enterprise networks, especially the internet. That’s why we build and refine our monitoring software to align with constant change.

Site Reliability Engineering, Site Reliability Engineers and SRE Practices: State of Adoption

Site reliability engineering (SRE) is what you get when you treat operations as if it’s a software problem. The mission of an SRE practice is to protect, provide for and progress the software and systems offered and managed by an organization with an ever-watchful eye on their availability, latency, performance and capacity.

Search all apps - understand the impact of an error across your entire tech stack

Find the root cause faster with Datadog and Zebrium

When troubleshooting an incident, DevOps teams often get bogged down searching for errors and unexpected events in an ever-increasing volume of logs. The painstaking nature of this work can result in teams struggling to resolve issues before new incidents appear, potentially leading to an incident backlog, longer MTTR, and a degraded end-user experience.

How the right monitoring tools can bolster operational resilience in finance

The financial services industry has been under increasing pressure during the past several years to view operational resilience and their risk management postures as being symbiotic in the wake of rising operational incidents and increasingly frequent security threats.

Goats on the Road: Getting More Value From Observability Data

The best part of my job is talking with prospects and customers about their logging and data practices while explaining how Cribl focuses on getting more value from observability data. I love to talk about everything they are doing and hope to accomplish so I can get a sense of the end state. That is vital to developing solutions that provide overall value across the enterprise and not just a narrow tactical win with limited impact.

Get the Most Out of Serverless for Fleet Management Apps

You’ve probably seen Rush Hour, a logic puzzle where you have to slide cars and trucks out of the way to steer the red car towards the exit. In real life, when your customers are responsible for tracking hundreds or thousands of data points from dozens of valuable, mission-critical sensors, you’re tracking engine speed, network signal level, distance from the RF, and more—and not just through traffic but across continents.

What Does SASE Mean (for VPN)?

What is Azure Advisor?

Azure Advisor analyzes your configurations and usage telemetry and offers personalized, actionable recommendations to help you optimize your Azure resources for reliability, security, operational excellence, performance, and cost. Azure Advisor is a free service and can be accessed via the GUI on the Azure portal where recommendations are collated and can be manually examined. Azure Advisor makes recommendations for potential improvements in several areas, including.

What's new in Sysdig - August 2022

Welcome to another month of What’s New in Sysdig in 2022! I’m Joshua Ma, a Customer Solutions Engineer based out of sunny Los Angeles. I joined the Customer Success team at Sysdig five months ago. After having my first taste of K8s, containers, and Falco at the North America KubeCon/CloudNativeCon in 2019, I haven’t looked back since!

Sysdig launches Partner Technical Accreditation Program

In the quest for business transformation and digital modernization, organizations have rapidly adopted devops frameworks, microservice architectures, serverless technologies, and containerized infrastructures. However, they have realized that legacy tools cannot adequately address the newer security and monitoring challenges associated with modernization. Sysdig’s mission is to make every cloud platform secure and reliable from source to run.

Announcing Native Collectors: Bringing Native Data Collection to InfluxDB Cloud

Streaming time series data from brokers and services that are on-premises or in the cloud to a cloud-based database is a resource-intensive process requiring third-party software and heavy customizations. Today we’re announcing InfluxDB Native Collectors to make it easy for developers to collect, process, and analyze data by subscribing directly to supported message brokers.

InfluxData Brings Native Data Collection to InfluxDB

SAN FRANCISCO — August 23, 2022 – InfluxData, creator of the leading time series platform InfluxDB, today announced new serverless capabilities to expedite time series data collection, processing, and storage in InfluxDB Cloud. InfluxDB Native Collectors enable developers building with InfluxDB Cloud to subscribe to, process, transform, and store real-time data from messaging and other public and private brokers and queues with a click of a button.

Yotpo Testimonial

Application Observability, The Next Step in Application Performance Monitoring

Why Do You Need Smarter Alerts?

The way organizations process logs has changed over the past decade, from random files scattered amongst a handful of virtual machines to JSON documents effortlessly streamed into platforms. Metrics, too, have seen great strides, as providers expose detailed measurements of every aspect of their system. Traces, too, have become increasingly sophisticated and can now highlight even the most precise details about interactions between our services. But alerts have remained stationary.

5 FinTech Log Analytics Challenges Equifax Solved with ChaosSearch

Introducing Dynamic Sampling

In the monitoring industry there’s a complicated and frustrating conversation that has persisted over the years: how do you deal with the enormous volume of data generated by instrumentation? On one side of the aisle, you will find a cohort of vendors and developers telling you that you have to sample data, followed immediately by another group telling you that sampling will ruin the accuracy of incident analysis. They’re both right.

Top 5 Debugging Tips for Kubernetes DaemonSet

Kubernetes is the most popular container orchestration tool for cloud-based web development. According to Statista, more than 50% of organizations used Kubernetes in 2021. This may not surprise you, as the orchestration tool provides some fantastic features to attract developers. DaemonSet is one of the highlighted features of Kubernetes, and it helps developers to improve cluster performance and reliability.

observIQ Announces General Availability of Open Source Observability Solution BindPlane OP

Monitor your gRPC APIs with Datadog Synthetic Monitoring

gRPC is an open-source Remote Procedure Call (RPC) framework developed by Google and released in 2016. Although gRPC is still relatively new, large organizations are adopting it in increasing numbers to build APIs that connect complex microservice meshes that use disparate languages and frameworks. gRPC-based APIs can perform requests up to seven times faster than REST APIs and enable customers to easily implement SSL authentication, load balancing, and tracing via plug-in libraries.

Understanding monitoring and observability

Roaming in the world of cloud technology not only helps you take a glance at the realm of cutting-edge technology but also helps you get familiar with concepts such as monitoring and observability. This article will cover an introduction to monitoring and the need for monitoring applications. From here, we will look at how you can utilize the data received when monitoring an application. This will allow us to understand how the concept of observability fits in with monitoring.

How to monitor Solr with OpenTelemetry

10Web Booster: Speed Up Your WordPress Site with One Tool

When it comes to a website’s performance, we all know the universal rule: speed matters… a lot. Beyond a good user experience, it’s a key factor in what Google is specifically looking—and testing—for. If you need a refresher, here it is, straight from Google: And what exactly does Google consider fast?

Monitoring Unit Tests with OpenTelemetry in .NET

In this post, we’ll look at how you can use OpenTelemetry to monitor your unit tests and send that data to Honeycomb to visualize. It’s important to note that you don’t need to adopt Honeycomb, or even OpenTelemetry, in your production application to get the benefit of tracing. This example uses OpenTelemetry purely in the test project and provides great insights into our customer’s code. We’re going to use xUnit as the runner and framework for our tests.

Autoscaling Elasticsearch/OpenSearch Clusters for Logs: Using a Kubernetes Operator to Scale Up or Down

When we say “logs” we really mean any kind of time-series data: events, social media, you name it. See Jordan Sissel’s definition of time + data. And when we talk about autoscaling, what we really want is a hands-off approach at handling Elasticsearch/OpenSearch clusters. In this post, we’ll show you how to use a Kubernetes Operator to autoscale Elasticsearch clusters, going through the following with just a few commands.

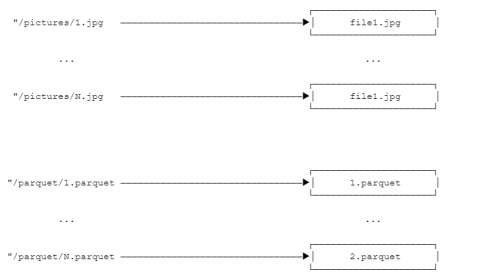

Grafana Tempo 1.5 release: New metrics features with OpenTelemetry, Parquet support, and the path to 2.0

Grafana Tempo 1.5 has been released with a number of new features. In particular, we are excited that this is the first release with experimental support for the new Parquet-based columnar store. Read on to get a high-level overview of all the new changes in Grafana Tempo! If you’re a glutton for punishment, you can also dig into the hairy details of the changelog.

Rust Object Store Donation

Today we are happy to officially announce that InfluxData has donated a generic object store implementation to the Apache Arrow project. Using this crate, the same code can easily interact with AWS S3, Azure Blob Storage, Google Cloud Storage, local files, memory, and more by a simple runtime configuration change. You can find the latest release on crates.io. We expect this will accelerate the pace of innovation within the Rust ecosystem.

Commit Auto Association

Migrating Monoliths to Microservices in Practice

There have been amazing articles on the subjects of migrating from a monolith to a microservice architecture e.g. this is probably one of the better examples. The benefits and drawbacks of the architectures should be pretty clear. I want to talk about something else though: the strategy. We build monoliths since they are easier to get started with. Microservices usually rise out of necessity when our system is already in production.

Why and How to Monitor AWS Elastic Load Balancing

When building systems that need to scale above a certain number of users, we usually can’t stay on one machine. This is where cloud providers like AWS usually come into play. They allow us to rent VMs or containers for small intervals. This way, we can start a few different machines when more traffic hits, and when it goes down later, we can simply turn off our extra capacity and save money. The question is, how does all this traffic get to our new machines? AWS Elastic Load Balancing!

What is on-call, and why is it important?

Your company has a product/service that needs to be up and running 24/7 or serving customers worldwide? Heads up, you might need an on-call team. In this article, we’ll start with the basics of what is on-call and why it is important.

How are RED metrics calculated in SigNoz | SigNoz Office Hours

How are RED metrics calculated in SigNoz?
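As a rough illustration of what RED (Rate, Errors, Duration) metrics mean, the sketch below derives them from a list of finished spans. It is not SigNoz's implementation: the `Span` class, the `red_metrics` function, the 60-second window, and the nearest-rank percentile are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from math import ceil


@dataclass
class Span:
    """Hypothetical, minimal span record used only for this example."""
    duration_ms: float
    is_error: bool


def red_metrics(spans, window_seconds, percentile=99):
    """Return (requests per second, error ratio, duration percentile in ms)."""
    if not spans:
        return 0.0, 0.0, 0.0
    count = len(spans)
    rate = count / window_seconds                      # R: requests per second
    error_ratio = sum(1 for s in spans if s.is_error) / count  # E: share of failed requests
    durations = sorted(s.duration_ms for s in spans)
    # D: nearest-rank percentile, i.e. the smallest observed value such that
    # at least `percentile` percent of samples are at or below it.
    rank = max(1, ceil(percentile / 100 * count))
    return rate, error_ratio, durations[rank - 1]


# Four made-up spans observed during a 60-second window.
spans = [Span(12.0, False), Span(30.0, False), Span(250.0, True), Span(18.0, False)]
rate, error_ratio, p99 = red_metrics(spans, window_seconds=60)
```

A real backend would typically compute these per service and per operation over rolling windows rather than over a single in-memory list.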

Modern Observability and Digital Transformation

For most businesses, effective digital transformation is a key strategic objective, and as computing infrastructure grows in complexity, end-to-end observability has never been more important to this cause. However, the amount of data and dynamic technologies required to keep up with demand only continues to increase, and current tools are not equipped to handle it, with any discrepancies resulting in rising costs and reduced competitiveness.

Why You Shouldn't Use OpenTracing In 2022

OpenTracing was an open-source project developed to provide vendor-neutral APIs and instrumentation for distributed tracing across a variety of environments. As it is often extremely difficult for engineers to see the behaviour of requests when they are working across services in a distributed environment, OpenTracing aimed to provide a solution to heighten observability.

An introduction to OpenTelemetry Metrics

InfluxDB Python Client Library: A Deep Dive into the WriteAPI

InfluxDB is an open-source time series database built to handle enormous volumes of time-stamped data produced by everything from IoT devices to enterprise applications. As data sources for InfluxDB can exist in many different situations and scenarios, providing different ways to get data into InfluxDB is essential. The InfluxDB client libraries are language-specific packages that integrate with the InfluxDB v2 API. These libraries give users a powerful method of sending, querying, and managing InfluxDB.
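To give a feel for what a write ultimately sends, here is a minimal sketch that formats one data point as InfluxDB line protocol, the text format accepted by the v2 write endpoint. It deliberately uses only the standard library instead of the client package; the helper names (`to_line_protocol`, `_escape`, `_format_field`) and the sample measurement, tag, and field names are hypothetical.

```python
def _escape(text, chars):
    """Backslash-escape each of the given special characters."""
    for ch in chars:
        text = text.replace(ch, "\\" + ch)
    return text


def _format_field(value):
    """Render a field value using line protocol type conventions."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"       # integers carry an 'i' suffix
    if isinstance(value, float):
        return repr(value)
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'        # strings are double-quoted


def to_line_protocol(measurement, tags, fields, timestamp_ns=None):
    """Build: measurement,tag=value field=value [timestamp]."""
    if not fields:
        raise ValueError("line protocol requires at least one field")
    line = _escape(measurement, ", ")
    for key in sorted(tags):     # sorted tag keys are recommended for write performance
        line += f",{_escape(key, ',= ')}={_escape(str(tags[key]), ',= ')}"
    line += " " + ",".join(
        f"{_escape(name, ',= ')}={_format_field(value)}" for name, value in fields.items()
    )
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"
    return line


# Hypothetical sample point, with a nanosecond timestamp.
line = to_line_protocol(
    "water quality",
    {"site": "plant-1"},
    {"ph": 7.2, "pump_on": True, "count": 3},
    1661212800000000000,
)
```

In practice the client library builds and batches these lines for you; the sketch only shows the shape of the data that crosses the wire.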

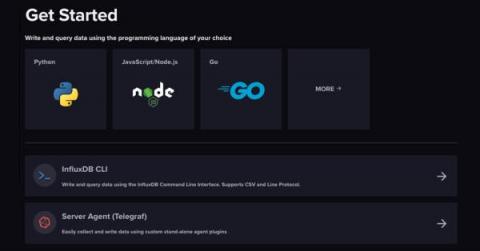

Product Update - CLI Onboarding Wizard Now Available

We love to write and ship code to help developers bring their ideas and projects to life. That’s why we’re constantly working on improving our product to meet developers where they are, to ensure their happiness, and accelerate Time to Awesome. This week, we are covering a featured product release that we think will save you time and effort when onboarding to time series and InfluxDB.

The Ultimate OpenTelemetry Guide for Developers

OpenTelemetry is a free and open-source software initiative with the objective of supplying software developers with the means to create distributed systems. OpenTelemetry was developed by engineers at Google, and developers have the ability to utilize it to create a standard foundation for the construction of distributed systems. The goal is to enable developers to write code once and then deploy it in any location of their choosing.

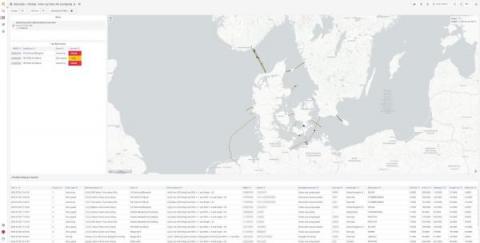

How Denmark's Energinet uses Grafana Enterprise to monitor underwater energy cables - and do detective work

If an energy cable running through the waters surrounding Denmark gets damaged by a passing vessel, does it make a sound? Yes... and it’s the ping of a Grafana alert at the offices of Energinet, an independent public enterprise owned by the Danish Ministry of Climate, Energy, and Utilities.

IT Asset Disposition: An MSP Opportunity That's Anything But Trash

ManageEngine Integrates with Sectigo® to Automate Certificate Lifecycle Management

What is OpenTelemetry?

OpenTelemetry is a collection of tools and APIs for collecting, processing, and exporting telemetry data from software. It is used to instrument applications for performance monitoring, logging, tracking, tracing, and other observability purposes. What is Telemetry? The word is derived from the Greek “tele,” meaning “remote,” and “metron,” meaning “measure.” So, it’s the collection of metrics and their automatic transmission to a receiver for monitoring.

An Engineering Manager's Bill of Rights (and Responsibilities)

In 2018, Honeycomb co-founder & CTO Charity Majors wrote a blog post titled, “An Engineer’s Bill of Rights (and Responsibilities).” We’ve recently updated and reposted it.

How to Manage a Network: 10 Essential Steps

Getting Started With Syslog in Auvik

Choosing the Best Virtual Desktop Infrastructure (VDI) Technology for Your Enterprise

Virtual desktop infrastructure (VDI) is a technology that refers to the use of virtual machines to provide and manage virtual desktops. Users access virtual desktops from their laptops, desktops, thin clients, or mobile devices from anywhere. Virtual desktops are hosted in a data center, on servers, and all the necessary processing is done on the server that hosts the virtual desktops.

Mezmo Named to Inc. 5000's List of Fastest Growing Companies in the Nation

Inc. is shining a light on Mezmo as one of the fastest growing companies in the nation. We are truly honored to be featured alongside innovative brands like Sentry and Calendly, who are building the future of tech. Our position on the list at number 695 reflects our 900% growth in revenue and 300% growth in the size of our team from 2018 to 2021.

That Rogers Outage is Going to be More Expensive Than You Think

On July 8 of 2022, the Canadian telecom company Rogers Communications suffered a major outage that impacted most of Canada for almost two days. This wasn’t completely unprecedented (they’d had an outage in 2021 that impacted their wireless servers for several hours) but the breadth and severity of this one is going to end up costing them far, far more than it seems at first glance.

A preview of our upcoming redesign

Earlier this year, we announced that one of our goals for this year is to bring the UI of Oh Dear to the next level. Behind the scenes, our team is working hard on a complete rewrite of our marketing website and app. We're currently targeting the end of September timeframe to launch our redesign. In this blog post, we'd like to give you a preview of the redesign.

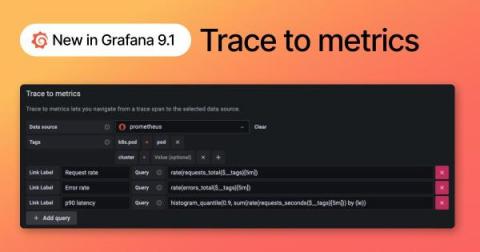

New in Grafana 9.1: Trace to metrics allows users to navigate from a trace span to a selected data source

Traces, logs, and metrics provide inherently different views into a system, which is why correlating between them is important. With features like exemplar support, trace to logs, and span references, you can quickly jump between most telemetry signals in Grafana. With the release of Grafana 9.1, we’re improving Grafana’s ability to correlate different signals by adding the functionality to link between traces and metrics.

How to Supercharge Your Website Monitoring in 5 Minutes or Less

I’m a recent entrant to the Website Monitoring game, but there is one thing I realized straight away: A Monitoring tool is only as good as it’s configured to be. Website monitoring is at its best when it’s reliable, informative, and efficient. When it gives you the information you need, when you need it, and the peace of mind to say “if I’m not being alerted, I know it’s still working.”

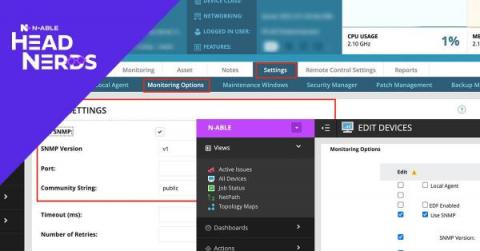

Using N-central for Server Hardware Monitoring

While it is fair to say that in recent years we’ve seen a shift to servers being deployed in the cloud through Microsoft Azure or AWS, I’m sure if you’re reading this today you still have a large percentage of physical servers under your management, including Hyper-V and ESXi hosts. N-central’s ESXi monitoring should automatically detect and monitor the hardware in these boxes, but what about the rest?

What's the deal with Playwright's web-first assertions?

Send a Test Span Through an OpenTelemetry Collector

The scenario: you want to see distributed traces, maybe for your web app. You’ve set up an OpenTelemetry collector to receive OTLP traces in JSON over HTTP, and send those to Honeycomb (how to do that is another post, and we’ll link it here when it’s up).
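A minimal sketch of such a test span, assuming the collector's OTLP/HTTP receiver listens on the default `http://localhost:4318/v1/traces`. The service name, scope name, span name, and the helper functions `build_test_span` and `send_span` are placeholders invented for this example; adjust the endpoint to match your collector configuration.

```python
import json
import secrets
import time
import urllib.request


def build_test_span(service_name="test-service", span_name="test-span"):
    """Build an OTLP/JSON trace payload containing a single span."""
    end_ns = time.time_ns()
    start_ns = end_ns - 1_000_000  # pretend the span lasted 1 ms
    return {
        "resourceSpans": [{
            "resource": {"attributes": [
                {"key": "service.name", "value": {"stringValue": service_name}},
            ]},
            "scopeSpans": [{
                "scope": {"name": "manual-test"},
                "spans": [{
                    # OTLP/JSON encodes IDs as hex: 16 bytes for traces, 8 for spans.
                    "traceId": secrets.token_hex(16),
                    "spanId": secrets.token_hex(8),
                    "name": span_name,
                    "kind": 1,  # SPAN_KIND_INTERNAL
                    "startTimeUnixNano": str(start_ns),
                    "endTimeUnixNano": str(end_ns),
                }],
            }],
        }]
    }


def send_span(payload, endpoint="http://localhost:4318/v1/traces"):
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status


payload = build_test_span()
# With a collector running locally, send it with: send_span(payload)
```

If the collector accepts the request, the span should then show up wherever the collector's exporters forward it.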

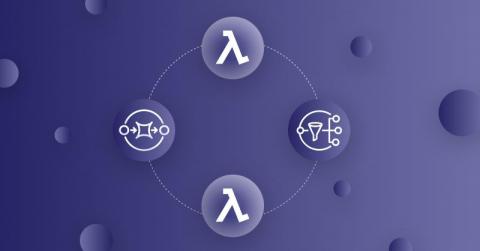

Shine Some Light on Your SNS to SQS to Lambda Stack

The combination of SNS to SQS to Lambda is a common sight in serverless applications on AWS, perhaps triggered by messages from an API function. This architecture is great for improving UX by offloading slow, asynchronous tasks so the API can stay responsive. It presents an interesting challenge for observability, however, because observability tools are not able to trace invocations through this combination end-to-end. In X-Ray, for example, the trace would stop at SNS.

Goliath Technologies Achieves Record First-Half 2022 Growth

Philadelphia, PA – August 10, 2022 – Goliath Technologies, a leader in end-user experience monitoring and troubleshooting software for hybrid cloud environments, announced today that they achieved record revenue growth during the first half of the calendar year 2022, up more than 45% YOY 2021.

Observability vs. Monitoring: Discovering the Difference

Successful IT operations management (ITOM) of today’s complex enterprise infrastructure requires collecting a lot of data and making informed, automated decisions based on the insights contained in that data.

Concurrent Users vs. Total Users Explained

High-Scale Monitoring: Lessons from Broadcom's DX UIM Deployment

While many people know us from our semiconductor and infrastructure software solutions, few have visibility into what goes on behind the scenes to support Broadcom’s global business. Within the Broadcom Software division, the Broadcom Global Technology Organization (GTO) is responsible for managing an extensive IT infrastructure, one that spans 18 data centers, 100 sites, and 400 R&D labs.

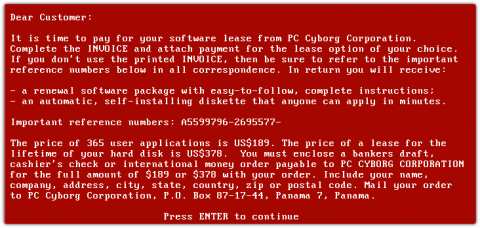

Battle the Ransomware Scourge with Deep Network Insight

Ransomware is the gift that keeps on giving. Old as it is (33 years) ransomware is constantly morphing into new exploits. The reason is simple. Ransomware works and too often cybercriminals walk away with bags of money (or piles of Bitcoin, anyway). “Following the World Health Organization's AIDS conference in 1989, Joseph L. Popp, a Harvard-educated biologist, mailed 20,000 floppy disks to event attendees.

How to Troubleshoot Intermittent Internet Connection

Dealing with an intermittent Internet connection is very frustrating. Does your Internet connection keep disconnecting and reconnecting when you’re watching your favourite Netflix show or chatting with your colleagues on Zoom? In this article, we’re teaching you how to troubleshoot intermittent Internet issues with Network Monitoring.

Stack Trace Line Numbers for Unity Events

In 2018 we launched the Sentry Unity SDK, but at the time, we couldn’t crack how to display stack trace line numbers for C# exceptions with IL2CPP scripting backend. And until a recent release of Unity, we thought it wasn’t possible. But here at Sentry we often do the impossible… or at least the improbable. Like adding features to our JavaScript SDK while making it smaller at the same time.

SaaS Monitoring Best Practices

Top 4 challenges of Windows service monitoring that every network admin needs to know

Monitor your Dataflow pipelines with Datadog

Dataflow is a fully managed stream and batch processing service from Google Cloud that offers fast and simplified development for data-processing pipelines written using Apache Beam. Dataflow’s serverless approach removes the need to provision or manage the servers that run your applications, letting you focus on programming instead of managing server clusters. Dataflow also has a number of features that enable you to connect to different services.

Networking Automation Software: Pros & Cons

Use actual data in Sketch for more realistic mockups

This time I want to show a feature that’s not obvious at first sight, but can save a lot of time, when you’re intending to create UI mockups with realistic data.

How does Core Web Vitals impact SEO?

Google announced a new set of metrics known as “Core Web Vitals” which will be used as ranking factors in 2021. The announcement is part of Google’s ongoing effort to make the web more user-friendly and to help site owners improve the experience for their visitors. This is big news for site owners and SEOs who are always looking for ways to improve their site’s ranking on Google.

AWS US-EAST-1 Outage... Again

Coralogix DataMap Overview

Honeycomb Play: Test Drive Honeycomb Without Signup or Setup

Honeycomb Play is an interactive sandbox that lets users explore Honeycomb’s data-enriched UI through a guided scenario. The hands-on experience takes a deep dive into how Honeycomb enables you to identify issues, assess their impact, and diagnose their causes for remediation. There is no requirement to sign up—simply dive in and get started right away!

Zero Trust is NOT enough...

The current trend to move to Zero Trust security architectures using the mantra “Never Trust, Always Verify” is a move that Teneo has long supported. The simple fact is that the traditional castle and moat approach to security built on VPNs is not fit for purpose in the modern world with hybrid users and infrastructures.

Five tips for developing an observability mindset

In the race to create more sophisticated apps, microservices and scalable cloud environments, monitoring is not enough. As environments gain complexity, an observability mindset is paramount in transforming data into mission-critical insights.

Pros and Cons of Horizontal vs. Vertical Scaling

Getting Started With Azure Database for PostgreSQL

New in Grafana 9.1: Share your Grafana dashboard with anyone via public dashboards

We’re excited to announce the launch of a new feature we’ve been working on in Grafana 9.1: public dashboards 🎉. The public dashboards feature will allow you to share your Grafana dashboard with anyone, even if they’re not part of your Grafana organization. Historically, the only way that someone could share a dashboard externally was taking a one-time snapshot 📸, or disabling all authorization for their Grafana instance 😬.

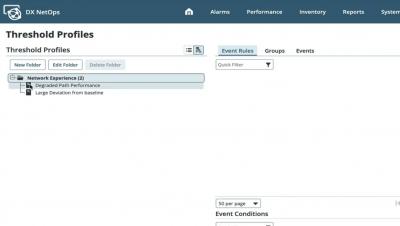

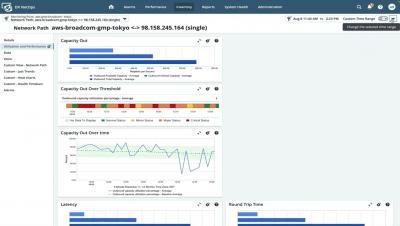

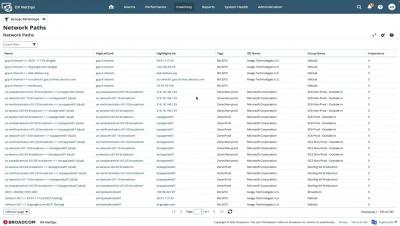

Tame the Internet with DX NetOps 22.2 Network Monitoring Software

DX NetOps 22.2 optimizes network operations with industry-leading visibility, scale and modern network coverage beyond the network edge to quickly and easily isolate end-user experience impact of network performance issues. Recent research revealed that 67% of companies cite internet and cloud network paths as monitoring blind spots. Furthermore, 71% of companies say that adoption of new network technologies is delayed by inadequate network monitoring software.

Troubleshooting AWS EC2 Slowness

Among the 200+ fully featured services that Amazon Web Services (AWS) offers, Elastic Compute Cloud (EC2) is the most popular. In the recent eG Innovations and DevOps Institute survey of 900+ IT professionals, cloud instances were the most commonly used cloud service, with 63% usage among respondents.

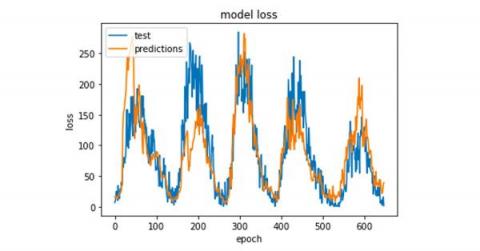

Time Series Forecasting With TensorFlow and InfluxDB

This article was originally published in The New Stack and is reposted here with permission. You may be familiar with live examples of machine learning (ML) and deep learning (DL) technologies, like face recognition, optical character recognition (OCR), the Python language translator, and natural language search (NLS). But now, DL and ML are working toward predicting things like the stock market, weather and credit fraud with astounding accuracy.

InfluxDB's Strengths and Use Cases Applied in Data Science

This article was written by Shane from Infosys. Infosys is a global IT Leader, headquartered in India, with over 200,000 employees and a focus on digital transformation, AI/ML, and Analytics. Our organization faces challenges when working with data to assist with proactive anomaly detection, triaging incidents to accommodate for data and volume growth, and maintaining high availability and SLAs for a near 100% uptime.

You've instrumented performance...now what?

Are Your Engineers Gonna Need A Bigger Boat?

If you asked your engineering team how well they can handle all of the security and observability data they’re managing, would you get a resounding “Yeah boss, we’re good to go!” in response? Possible, but unlikely. Chances are they feel like they’re stuck on a boat that’s taking on water, spending their day using tiny buckets to scoop some of it out, with no way to plug any of the leaks.

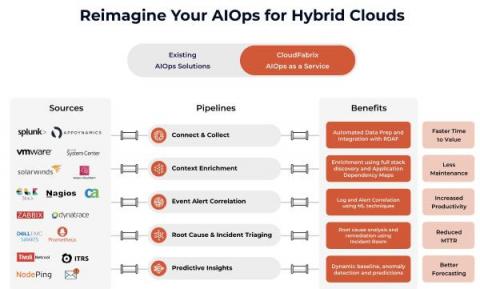

AIOps: Hype vs. Reality

What is AIOps? How does an AIOps platform help your observability practice? AIOps platforms analyze telemetry and events, and identify meaningful patterns that provide insights to support proactive responses. AIOps platforms have five characteristics. The above is Gartner’s definition and is part of the Gartner® “Market Guide for AIOps Platforms.” The Gartner definition is also aligned with our view.

Moving from an IT and Security Data Admin to an Observability Engineer

Make Your Hybrid Work Model Successful with Digital Experience Monitoring

According to a new study, the hybrid work model reduces attrition by a third. As the pandemic shifted work to remote, with some companies going fully remote for over two years, many organizations are looking to continue to allow hybrid work environments. In 2022, more than 90% of midsize companies plan to implement a hybrid work structure. One of our customers practices this model and is always interested to know what new features can enhance the digital experience of their distributed workforce.

Introducing OpUtils' IP Request tool

Are multiple IT operators accessing, utilizing, or managing your network address space? If so, then you might have noticed that one of the time-consuming network management tasks you undertake regularly is allocating IP addresses to the IT operators. This is an inevitable task since, as your network scales with new physical components or technology implementations, your operators require new IPs to enable network connectivity.

AIOps Feature Scape: How you can Accelerate AIOps Data Integrations with Insane New Robotic Data Automation Fabric (RDAF)

Enable hyperautomation with Avantra

Three NuGet packages to improve exceptions in .NET/C#

We love exceptions. Not in the oh-no-my-website-crashed kind of way, but all of the possibilities provided by exceptions and a good exception handling strategy. In this post, I'll introduce you to three different NuGet packages that will help you when dealing with exceptions in C#. .NET comes with a set of exceptions as part of the C# language. You've probably tried creating your own exceptions too by extending System.ApplicationException or similar.

Grafana 9.1 release: New Grafana panels, RBAC for plugins, public dashboards, and more!

Grafana 9.1 is here! We’ve made a number of improvements to Grafana’s usability, data visualization, and security. For a full list of new features and capabilities, check out our What’s New in Grafana 9.1 documentation. You can get started with Grafana in minutes with Grafana Cloud. We have a generous free forever tier as well as plans to suit every use case; sign up for free now. Here are some of the highlights in Grafana 9.1.

The Three-Month Fix: How AbbVie Kept Their VDI Users Up and Running

The complexity of today’s workplace technology means that all of our environments are incredibly unique. Two organizations may use the same platforms and applications, but the tactics we use to implement these tools are all unique to our own goals and business needs. But all of us who work in IT and engineering can agree: our companies’ success hinges on our ability to keep our environments running smoothly. I’m a senior engineer at the pharmaceutical company AbbVie.

Top API Metrics for Different Teams That You Should Monitor

Building and utilizing modern applications now essentially requires APIs. They are a crucial component of every company's automated workflow, and as more customers depend on your APIs to power their applications, the demand for them to be trustworthy is growing. Your business will suffer if its performance, availability, or health degrades, thus proactive API monitoring is essential to ensure its dependability. We'll go through the most important API metrics in this article.

Sustainable Technology: Can Clouds Save The Planet?

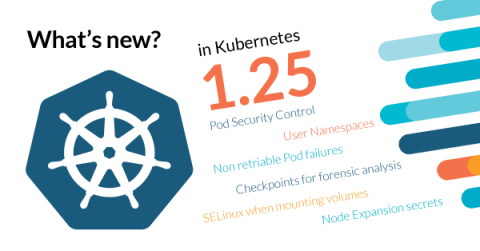

Kubernetes 1.25 - What's new?

Kubernetes 1.25 is about to be released, and it comes packed with novelties! Where do we begin? This release brings 40 enhancements, on par with the 46 in Kubernetes 1.24 and 45 in Kubernetes 1.23. Of those 40 enhancements, 13 are graduating to Stable, 10 are existing features that keep improving, 15 are completely new, and two are deprecated features.

Welcome To The Experience-Driven NOC: Track Network Path Deviation From Normal Performance

Welcome to the Experience-Driven NOC: Network Path Performance Metrics

Django Improvements - Part 3: Frontend Optimizations

In the last 2 parts of this series around improving performance in your Django applications, we focused on database and code optimizations. In part 3, we will focus on ways to improve the frontend speed of our Django applications by using the following.

Unity Debugging: Tips and Tricks

Debugging is a frequently performed task not just for general software developers but also for game developers. During a debugging process of a game, most issues can be identified by simulating a code walkthrough.

Centralizing Log Data to Solve Tool Proliferation Chaos

As companies evolve and grow, so does the number of applications, databases, devices, cloud locations, and users. Often, this comes from teams adding tools instead of replacing them. As security teams solve individual problems, this tool adoption leads to disorganization, digital chaos, data silos, and information overload. Even worse, it means organizations have no way to correlate data confidently. By centralizing log data, you can overcome the data silos that tool proliferation creates.

Welcome to the Experience-Driven NOC: Network Path Change Alarms and Drill Down Context Pages

Welcome to the Experience-Driven NOC: Configure DX NetOps to Collect AppNeta Experience Metrics

Advanced Debugging and Monitoring for Serverless Backends

Serverless backends have different monitoring challenges when compared with traditional applications, mostly due to the distributed and proprietary nature of serverless. Making monitoring and debugging efficient for serverless requires a unique set of tools and techniques. In this article, we’ll discuss the challenges of debugging serverless backends and how to utilize third party tools to improve the monitoring process.

What's Missing From Almost Every Alerting Solution in 2022?

Alerting has been a fundamental part of operations strategy for the past decade. An entire industry is built around delivering valuable, actionable alerts to engineers and customers as quickly as possible. We will explore what’s missing from your alerts and how Coralogix Flow Alerts solve a fundamental problem in the observability industry.

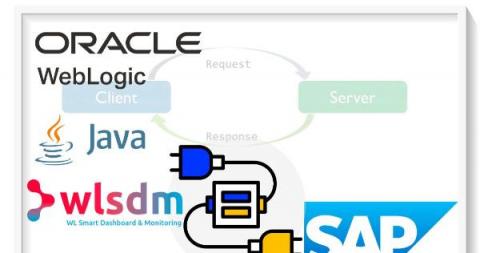

How to Monitor SAP System Connection Health and Create Alert Mechanism?

As a Middleware Administrator, you may need to monitor the status of SAP because of exceptions that you cannot predict. To do this, you may need to connect to the SAP system somehow. In this blog post, we’re going to show you how to monitor SAP from the Oracle WebLogic and Java side. You can connect to the SAP system using the WebLogic SAP Resource Adapter.

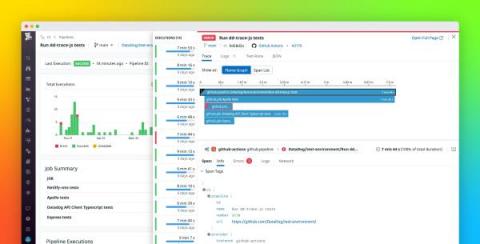

Quick Bytes - Execution Tags

Lumigo for Colorcast: A Game of Metrics

Applying synthetic monitoring across virtual desktop environments

Monitoring the Impossible

5 best practices for optimizing IP address management

Ever discovered an IP issue only after an end user reported it? If yes, you are not alone! Many network admins without a proactive monitoring solution in place have to constantly deal with recurring IP issues such as IP conflicts and subnet overutilization. As simple as it sounds, IP address management can be a tricky endeavor without the right strategy and management tools. Managing hundreds of IPs across multiple subnets and supernets can be tedious.

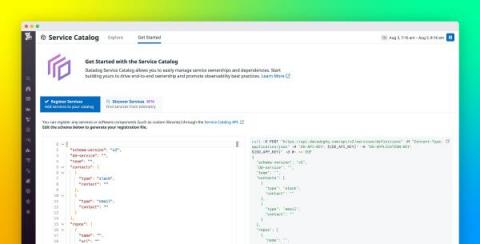

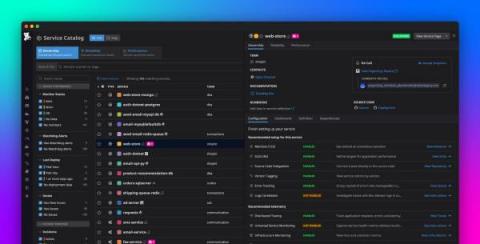

Manage Service Catalog entries efficiently with the Service Definition JSON Schema

The Datadog Service Catalog helps you centralize knowledge about your organization’s services, giving you a single source of truth to improve collaboration, service governance, and incident response. Datadog automatically detects your APM-instrumented services and writes their metadata to a service definition before adding them to the catalog.

Sentry Remix SDK | Sentry Show and Tell

How Veterans United Home Loans uses Grafana Cloud to help military families become homeowners

Veterans United Home Loans is the top VA lender for home buyers in the United States and has been making the dream of homeownership a reality for veterans and military families for more than two decades. A big part of making that dream come true is keeping their services – including both internal applications and a robust digital experience for their borrowers – highly performant.

DX NetOps 22.2 Expands Industry-Leading Observability for Fortinet SD-WAN

Resiliency As the Next Step in the DevOps Transformation

We’ve reached the point in the DevOps transformation where efficiency and automation are no longer the highest objectives. The next step is engineering past automation and towards fully autonomous, self-healing systems. If you aren’t conversing about building this type of resilience into your systems and applications, there’s never been a better time than now to start.

IT Salaries: Trends, Roles, & Locations for 2022-2023

IT roles have never been more in demand and IT salaries have never been higher, according to recent reports and data sources. Whether you are hiring, looking for a career change, or simply work in tech, it’s important to stay up-to-date on the state of employment in the industry. This blog post will review, round up, and summarize some of the latest trends for IT salaries and demand by role and location (among other variables) to help you get a clear view of the landscape.

State of Cloud Cost Report 2022

Cloud migration efforts continue to grow today as organizations move into a post-pandemic work environment. According to McKinsey & Company, by 2024, most enterprises aspire to have $8 out of every $10 for IT hosting go toward the cloud. In a survey by Morgan Stanley, CIOs say cloud computing will see the highest rate of IT spending growth in 2022.

Monitoring Performance at Moonbeam from Day One

As someone who has seen the devastating effects of poor performance monitoring firsthand, I can attest to the importance of doing it right from the start. If your users are experiencing latency issues and you’re not aware of them, that’s a big problem. At one of my previous jobs, we ended up paying out millions of dollars in SLA violation fees because we didn’t have proper monitoring.

Kubernetes Metrics Server | How to deploy k8s metrics server and use it for monitoring

Welcome to the Experience-Driven NOC

Product Update - Task Management at Scale and Invokable Scripts from the Tasks API

Thanks to Vinay Kumar for being a key contributor to this article. We love to write and ship code to help developers bring their ideas and projects to life. That’s why we’re constantly working on improving our product to meet developers where they are, to ensure their happiness, and accelerate Time to Awesome. This week, we are covering a featured product release that we think will save you time and effort when building with time series, InfluxDB – and specifically – Tasks.

Authors' Cut - Not-So-Distant Early Warning: Making the Move to Observability-Driven Development

This is how the developer story used to go: You do your coding work once, then you ship it to production—only to find out the code (or its dependencies) has security or other vulnerabilities. So, you go back and repeat your work to fix all those issues. But what if that all changed? What if observability were applied before everything was on fire? After all, observability is about understanding systems, which means more than just production.

Why do you need monitoring?

A Guide To Prometheus Metrics

Prometheus is among the leading open-source metric-gathering and alerting platforms for managing metrics from microservices architectures. As Prometheus is such a popular choice in the cloud computing landscape today, we will explain how Prometheus metrics can be leveraged for observability.

Using Grafana and machine learning to analyze microscopy images: Inside Theia Scientific's work

At GrafanaCONline 2022, Theia Scientific President, Managing Member, and Lead Developer Chris Field and Volkov Labs founder and CEO Mikhail Volkov — a Grafana expert — delivered a presentation about using Grafana and machine learning for real-time microscopy image analysis. Real-time microscopy image analysis involves capturing images on a microscope using a digital device such as a PC, iPad, or camera.

Introduction to Network Detection and Response

Network Availability Monitoring Tools

AWS Monitoring Tool

7 Most Important Key Metrics of Server Monitoring Software

Intro to Group Checks

Check out our free trial, no credit card required: https://uptime.com/go/home

How Modern Infrastructure is Impacting Application Availability at Scale

The complexity of modern information technology (IT) infrastructures has grown exponentially and changed the way software companies operate and deliver products and services. The days of a single application server and a simple delivery path are long gone. Today’s application development and delivery process can encompass multiple platforms, cloud vendors, code libraries and customer bases.

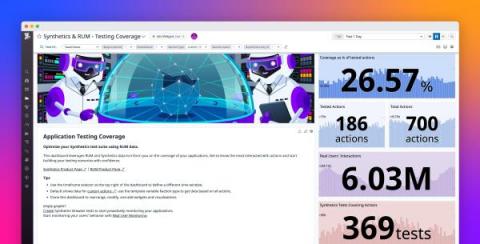

Track your test coverage with Datadog RUM and Synthetic Monitoring

The modern standards of the web demand that user-facing applications be highly usable and satisfying. When deploying frontends, it’s important to implement a comprehensive testing strategy to ensure your customers are getting the best possible user experience. It can be difficult, however, to gauge the effectiveness of your test suite. For instance, all of your tests may be passing, but they might not cover a specific UI element that is crucial to a critical workflow.

"Hey Avantra, refresh my QA systems"

I remember just about three years ago sitting in a companywide meeting in a conference room at the Museum of Modern and Contemporary Art in Lisbon, Portugal (Museu Coleção Berardo, Lisboa). Our CTO, Bernd Engist, was giving us a presentation about some new features we had recently developed on automating the start/stop process of an SAP system.

Coastal municipalities monitor their beaches to measure the environmental impact of tourism

The Spanish tourism sector is preparing for a record summer in which it hopes to match the summer seasons before the coronavirus pandemic. The government has already forecast that 90% of the foreign tourism that visited the country in 2019 will be recovered. That year, Spain received 84 million foreign visitors, a figure that is expected to be reached this year, helped by the high temperatures and the country’s tourism offering.

Citrix Monitoring Masterclass with George Spiers - Q&A

Citrix monitoring refers to the ability to monitor Citrix services end-to-end. It includes the ability to monitor user experience – from logon time to application launch time to screen refresh latency so administrators can easily monitor and track if they are meeting their service levels (SLAs).

Seven Tools to Help You Become a Better Serverless Developer

Serverless technologies let us do more with less effort, time and energy. They let us focus on creating user value and let the cloud handle undifferentiated heavy-lifting like scaling and securing the underlying infrastructure that runs our code. Serverless technologies have allowed me to accomplish tasks as a solo engineer that used to take a whole team of engineers to accomplish, and I’m able to complete these tasks in a fraction of the time and cost to my customers.

Ramp Up | Ep 06 | David Beck on Containers | Digital Transformation

Performance Monitoring with API

If you are in a room with 20 engineers and you ask, “explain what an API is to a non-technical person”, you will get 20 different analogies. An API is like the on button to your TV connecting you to a variety of shows and systems, or an API is like a waiter taking your order and serving you from the kitchen. An API is like a library card catalog, or it’s simply a tool that connects you to other tools.

Netdata Release v1.36

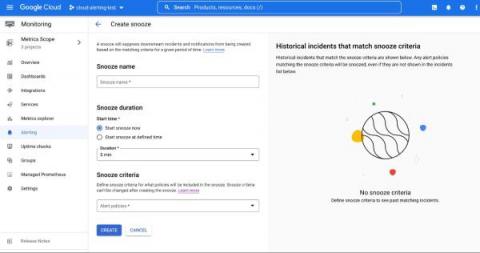

Snooze your alert policies in Cloud Monitoring

Does your development team want to snooze alerts during non-business hours? Or proactively prevent the creation of expected alerts during an upcoming maintenance window? Cloud Alerting in Google's Cloud operations suite now supports the ability to snooze alert policies for a given period of time. You can create a Snooze by providing specific alert policies and a time period. During this window, if the alert policy is violated, no incidents or notifications are created.
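
The snooze behavior described above can be illustrated with a small, self-contained Python sketch. It is not the Cloud Monitoring API: the `Snooze` class, the `should_open_incident` helper, the policy names, and the choice to treat the window as including its start and excluding its end are all hypothetical, chosen only to show the idea of suppressing incidents for listed policies during a time period.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Snooze:
    """Hypothetical model: a set of alert policy names plus a time window."""
    policy_names: frozenset
    start: datetime
    end: datetime

    def covers(self, policy_name: str, at: datetime) -> bool:
        # A snooze applies only to its listed policies and only inside its
        # window (assumed here to include the start and exclude the end).
        return policy_name in self.policy_names and self.start <= at < self.end


def should_open_incident(policy_name, violated_at, snoozes):
    """Return False when any snooze covers the violated policy at that time."""
    return not any(s.covers(policy_name, violated_at) for s in snoozes)


# Example: snooze a made-up "high-cpu" policy for a four-hour maintenance window.
maintenance = Snooze(
    policy_names=frozenset({"high-cpu"}),
    start=datetime(2022, 9, 3, 22, 0, tzinfo=timezone.utc),
    end=datetime(2022, 9, 4, 2, 0, tzinfo=timezone.utc),
)
```

With this model, a violation of `high-cpu` at 23:00 UTC on September 3 opens no incident, while a violation of a policy that is not listed in the snooze, or one that happens after the window ends, still does.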

Major Hosted VoIP Provider, bravad TI, Chooses Obkio for End-User Network Monitoring

Learn how Obkio works with technology agency & major hosted VoIP Service Provider, bravad TI, to create a streamlined network monitoring and troubleshooting process to optimize VoIP performance & improve the end user experience.

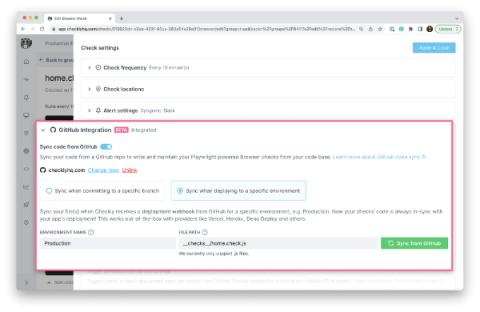

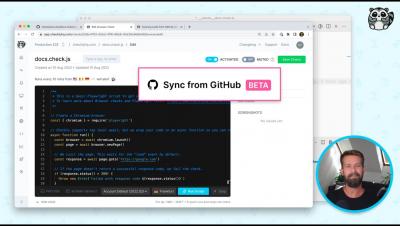

GitHub Browser check synchronization goes into public beta

One of our goals here at Checkly is to make it easier for developers to ship excellent software. But let’s face it, getting features out is only a tiny fraction of the story. Fast-moving development teams also break things. And the more things you build, the more things can go wrong. And trust me, they will. This is where API and end-to-end monitoring helps. Define automated test suites that check all your properties constantly and guarantee that everything’s up and running. All the time.

Grafana usage insights: How to track who is sending what metrics to your stack in Grafana Cloud

We are happy to announce the release of the Grafana usage groups feature in Grafana Cloud. This new feature — which is available in the Grafana Cloud Advanced plan — helps centralized observability teams and administrators track and attribute metrics usage back to groups that exist within a single shared Grafana stack. Ultimately, Grafana usage groups can help with governance and cost control.

What's The Best Employee Monitoring Software - ActivTrak or Insightful?

In the sea that is the SaaS market, there is an abundance of software for employee monitoring. This software, as the name suggests, is used for time tracking, monitoring, project management, and implementing safety measures. It is widely used by companies that want to ensure that their employees are actually getting work done while on the clock, but that’s not where the benefits of using these tools end.

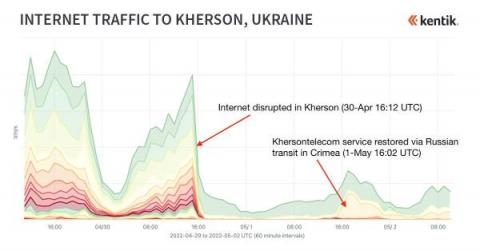

Understanding AS relationships, outage analysis and more Network Operator Confidential gems

The objective of Network Operator Confidential is to share our global internet market insights from recent months. Kentik, and our customers, have access to views and analysis of global internet traffic that no one else can match. In our first Network Operator Confidential webinar, I was joined by Doug Madory, Kentik’s director of internet analysis, and Grant Kirkwood, founder and CTO at Unitas Global.

How Site Uptime Impacts SEO (Hint: It's a REALLY Big Deal)

It is arguably the most important 3-letter acronym on the digital marketing landscape. No, it’s not ROI. It’s SEO. Consider that: Clearly, effective SEO is extremely important. And for many businesses — especially smaller companies that are competing against big, established enterprises — it’s a matter of survival. However, for some decision-makers outside of the digital marketing world, the link between SEO and site uptime is less clear. Let’s fix that.

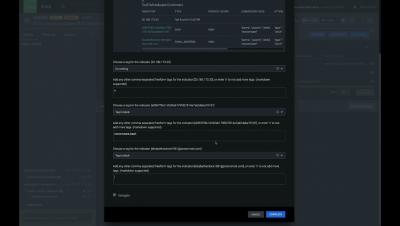

AppDynamics Cloud: Kubernetes Overview

Memory Profiling for Java Applications, a Splunk APM Product Walkthrough

How Cloud Network Monitoring Is Critical To Business Success

A rising number of businesses are adopting and utilizing cloud services and capabilities with remarkable success. But embracing cloud tools and services often brings unexpected changes for business leaders and IT teams, especially because of the way in which cloud adoption has altered how networks are monitored and managed.

One-stop Open Source Observability is now a reality with Log Management in SigNoz - SigNal 15

Alerting: A Key Part of Application Performance Monitoring

In today’s digital world, users expect to have a seamless experience in their day-to-day applications. To achieve that reliability and stability, developers need information about an application’s health and performance so they can gain insights and fix bottlenecks. One of the best ways to gain such insights into an application is to use a monitoring system.

IT Tool Rationalization and You