By Mezmo

At Mezmo, we handle an enormous volume of telemetry data for our customers and ourselves, which requires a robust and efficient search and analytics backend. For years, Elasticsearch served us well, but as our infrastructure grew to multiple clusters and multiple petabytes, we started to see the cracks: rising costs, performance bottlenecks, and scalability concerns. We needed a change that would make our system more cost-effective while maintaining speed and reliability.

By Mezmo

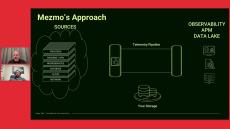

This is an updated version of an earlier blog post, revised to reflect current definitions of a telemetry pipeline and additional capabilities available in Mezmo. Our recent blog post about observability pipelines highlighted how they centralize telemetry data and make it actionable. A key benefit of telemetry pipelines is that users don't have to compare data sets manually or rely on batch processing to derive insights; instead, insights can be derived directly while the data is in motion.

By Mezmo

A lot happened in the world in 2024, but today I’m going to highlight some of the product accomplishments we achieved this year at Mezmo with our Telemetry Pipeline product.

By Mezmo

I attended the AWS re:Invent conference, Amazon Web Services' annual user conference, which takes over most of Las Vegas for a week. There’s a lot to do and take in: customer stories galore, new tech, different use cases to learn about, and all the walking. But you’re here to hear what I learned, so I’ve broken it down into sections. Enjoy!

By Mezmo

Last week, I attended one of the last conferences of the year with team Mezmo: the Gartner IT Infrastructure, Operations & Cloud Strategies Conference in Las Vegas. Not surprisingly, more than 20 sessions covered observability and its growing importance in today’s complex, distributed computing environments. And of course, many sessions, including all of the keynotes, addressed the advent and impact of AI on IT operations and observability.

By Mezmo

A few weeks ago, members of the Mezmo team attended several sessions at KubeCon. You can see a separate post with my recap and session highlights. Today, though, I’m going to discuss three sessions that my colleagues found especially relevant to our peers in observability.

By Mezmo

In April, we published Part I of this blog series, on how we planned to use our own Telemetry Pipeline product here at Mezmo to manage metrics data. Remember that observability utopia we dreamed about? Well, we’re not quite there yet, but we’ve made progress.

By Mezmo

I had the fantastic opportunity to sit down with Ben Good of Google and Rich Prillinger of Mezmo to discuss the new 2024 DORA report. The 10th edition of the DORA report covers the impact of AI on software development, explores the promises and challenges of platform engineering, and emphasizes developer experience and stable priorities as keys to success.

By Mezmo

Every day, services emit telemetry data that is processed and then either stored or deleted. Unfortunately, understanding and then optimizing that telemetry data can be difficult. These difficulties lead to higher MTTR and MTTD, burnout, and unwanted overage bills.

By Mezmo

Google Cloud recently released its 2024 DORA (DevOps Research and Assessment) report, bringing together a decade’s worth of trends, insights, and best practices on what drives high performance in software delivery across organizations of all sizes and industries. This year’s findings take a closer look at how DevOps teams can achieve greater resilience and efficiency by adopting AI, improving team well-being, and building powerful internal platforms.

By Mezmo

Managing telemetry data efficiently is a constant balancing act: how do you maximize visibility while controlling costs? In this webinar, we’ll show you how Mezmo’s telemetry pipeline helps you make smarter decisions about your data.

By Mezmo

Are you looking to enhance your observability and gain deeper insights into your systems? Curious about how a Telemetry Pipeline can revolutionize your monitoring and troubleshooting while keeping costs low? Join Mezmo’s Bill Balnave (Vice President of Technical Services) for an insightful webinar that unravels the key concepts of a Telemetry Pipeline and highlights its significance in modern software development and operations. Discover how a Telemetry Pipeline enables you to collect, profile, transform, and analyze crucial telemetry data from your applications and infrastructure.

By Mezmo

Watch our discussion on the 2024 DORA Accelerate State of DevOps report, where we dive into insights impacting software delivery, organizational strategy, and AI adoption in DevOps. We’ll review key findings and highlight practical steps for leaders to optimize development and delivery performance. Whether your organization is embracing AI, building internal platforms, or addressing burnout and resilience, this webinar will provide actionable takeaways for adapting to today’s evolving DevOps landscape.

By Mezmo

In today's digital-first, cloud-native world, effective log management is crucial. It enhances software quality, operational efficiency, and the customer experience. However, with the rise of distributed and microservices-based architectures, organizations now generate petabytes of log data daily, making analysis and storage increasingly challenging.

By Mezmo

The exponential growth of telemetry data presents a significant challenge for organizations, which often overspend on data management without fully capitalizing on its potential value. To unlock the true potential of their telemetry data, organizations must treat it as a valuable enterprise asset and apply rigorous data engineering principles to extract the critical insights and faster investigations this data is meant to enable. The telemetry data platform approach democratizes access across disciplines and personas and fosters widespread use across the organization.

By Mezmo

Ensuring access to the right telemetry data (logs, metrics, events, and traces) from all applications and infrastructure is challenging in our distributed world. Teams struggle with various data management issues, such as security concerns, data egress costs, and compliance regulations that require specific data to stay within the enterprise. Mezmo Edge is a distributed telemetry pipeline that processes data securely in your environment based on your observability needs.

By Mezmo

Telemetry (observability) pipelines play a critical role in controlling telemetry data (logs, metrics, events, and traces). However, the benefits of pipelines go well beyond log volume and cost reductions. In addition to pre-processing data bound for observability and SIEM systems, pipelines can support your compliance initiatives. This session will cover how enterprises can understand and optimize their data to reduce log volume while also reducing compliance risk.

By Mezmo

Telemetry (or observability) data can be overwhelming, but mastering the ever-growing deluge of logs, events, metrics, and traces can be transformative.

By Mezmo

Operational telemetry data (the events, logs, and metrics produced by applications and infrastructure) has enormous potential to help organizations maintain and improve operational efficiency and customer service. However, unlocking the value of telemetry data has become a challenge for enterprises.

By Mezmo

The explosion of telemetry data also massively increases your data bill. Teams cannot control data they do not understand, and they often lack the capabilities to act on it once they do understand it. Mezmo makes it easier to understand and optimize your data. It helps reduce unnecessary noise and cost and improves the quality of your data, so that your developers and engineers can consistently meet their service level objectives.

By Mezmo

Logging in the age of DevOps has become harder and more critical than ever because it is key to maintaining visibility and security in today's fast-moving, highly dynamic environments. With these needs and challenges in mind, Mezmo has prepared this eBook to offer guidance on how best to approach the log management challenges that teams face today.

By Mezmo

A growing number of log management solutions available on the market today are offered as cloud-only services. Although cloud logging has its benefits, many organizations have requirements that can only be fulfilled with self-hosted/on-premises log management systems.

By Mezmo

Here's a complete guide covering all core components to help you choose the best log management system for your organization. From scalability, deployment, compliance, and cost, to on-prem or cloud logging, we identify the key questions to ask as you evaluate log management and analysis providers.

By Mezmo

Although the ELK Stack is open source and has an extensive feature set, organizations are beginning to realize that a free ELK license is not free after all. Rather, it comes with many hidden costs, from hardware requirements to time constraints, that easily add to the total cost of ownership (TCO). Here, we uncover the true cost of running the Elastic Stack on your own versus using a hosted log management service.

Log Management Modernized. Instantly collect, centralize, and analyze logs in real-time from any platform, at any volume.

Why Mezmo?

- Powerful Logging at Scale: Get log aggregation, auto-parsing, log monitoring, blazing fast search, custom alerts, graphs, visualization, and a real-time log analyzer in one suite of tools. We handle hundreds of thousands of log events per second and 20+ terabytes per customer per day, and we boast the fastest live tail in the industry. Whether you run 1 or 100,000 containers, we scale with you.

- Easy, Instant Setup: Mezmo's SaaS log management platform sets up in under two minutes. Instantly collect logs from AWS, Docker, Heroku, Elastic, and more, with the flexibility to deploy anywhere: cloud, multi-cloud, or self-hosted. Logging in Kubernetes? Logs start flowing with just two kubectl commands. Whether you want to send logs via syslog, a code library, or an agent, we have hundreds of custom integrations.

- Affordable: Mezmo’s simple, pay-per-GB pricing model eliminates contracts, paywalls, and fixed data buckets. Try our free plan, or only pay for the data you use with no overage charges or data limits. Our user-friendly, frustration-free interface allows your team to get started with no special training required, saving even more time and money.

- Secure & Compliant: Our military-grade encryption ensures your logs are fully secure in transit and in storage. We offer SOC 2, PCI, and HIPAA-compliant logging. To comply with GDPR for our EU/Swiss customers, we are Privacy Shield certified. The privacy and security of your log data are always our top priority, and we are ready to sign Business Associate Agreements.

Blazing fast, centralized log management that's intuitive, affordable, and scalable.