January 2024

Introducing ManageEngine DDI: The key to unlocking the full potential of your critical network infrastructure

How to get SharePoint Online Library Size

A practical approach to on-call compensation

Cloudsmith Navigator: The Trusted Guide to OSS Package Quality

Product discovery in action | Atlassian Presents: Unleash | Atlassian

Still running Ubuntu 18.04? What you need to know

Driving into 2024 - The automotive trends to look out for in the year ahead

Supercharge Your Azure Savings Strategy with Azure Dev/Test Subscription

Enhancing Service Reliability: Uniting Rootly's Incident Management and Backstage's Software Catalog

#017 - Kubernetes for Humans Podcast with Nilesh Gule (DBS Bank)

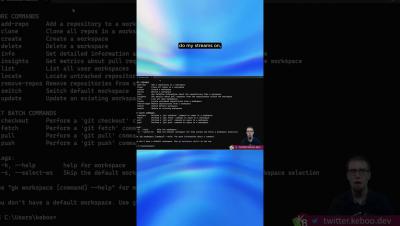

GitKraken Workshop: Making Sense of Multi-Repo Madness

8 Powerful CLI Extensions on GitHub in 2024

Zurich, a new low-carbon-powered public region, now available on Platform.sh

Advancing Platform Engineering with Northflank and Civo

Best Programming Languages for DevOps in 2024

Simple. Streamlined. Secure. Effortless user management has arrived with Teams

Introduction to Charmed Spark, A cloud-native Apache Spark solution on Kubernetes

Chaos To Control: Incident Management Process, Best Practices And Steps

The Pulse Of Technology: Why IT Monitoring Is Non-Negotiable In 2024

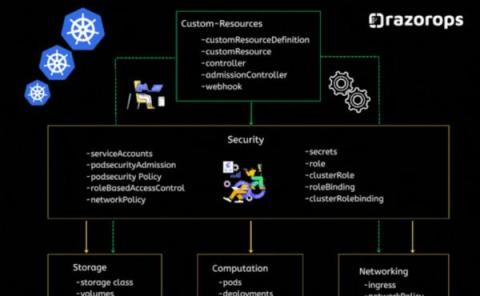

Kubernetes Tutorial for Developers

AI on-prem: what should you know?

Making Informed Software Selection Decisions: MCDA and DMA Compared

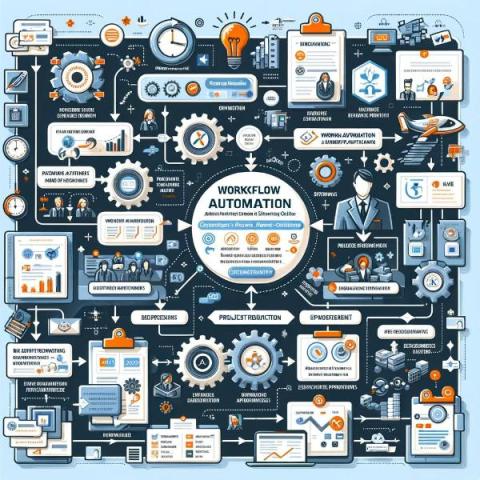

Mastering Workflow Automation in SharePoint Online

Managing the Talent Gap

Device Enrollment & 4 Common Methods

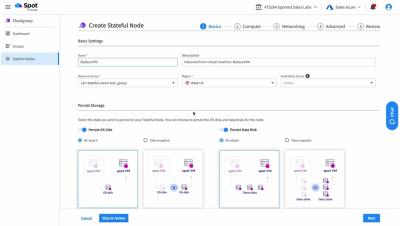

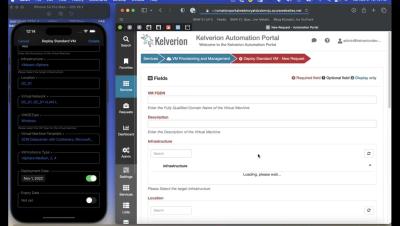

How to import an existing stateful Azure VM

How to spin up stateful Azure workloads

Cloudsmith Navigator

What can language models actually do well? | #GitKraken CTO at #Dockercon #shorts

Micrometer: The Gold Standard in Observability

Team DevOps effectiveness scorecard overview | Atlassian Analytics Demos | Atlassian

Organization DevOps effectiveness scorecard overview | Atlassian Analytics Demos | Atlassian

Cross-team DevOps effectiveness scorecard overview | Atlassian Analytics Demos | Atlassian

Secure your stack with Ubuntu

Are Multi-Cloud Environments Getting Denser in 2024?

Global Executives Embrace Value Stream Management as Key Driver for Long-Term Customer Value, Reveals New Survey

System Reliability Metrics: A Comparative Guide to MTTR, MTBF, MTTD, and MTTF

On-Demand Webcast: Unleashing FinOps

21+ DevOps Monitoring Tools Vital To Success

How To Maximize Free Cloud Resources Without Overstepping

Announcing the Mattermost Trustcenter

The Debrief: Why we killed our Slackbot and bought incident.io with Michael Cullum of Bud Financial

What to expect from Civo in 2024: GPUs, Navigate, and ML

What's new with AWS for 2024

Seven Jellyfish alternatives driving engineering efficiency and impact

Reliability At Your Fingertips | Squadcast

Create a Follow-the-Sun On-Call Model

What is a Failover Cluster? How It Works & Applications

How Organizations Hire SREs: Laterals or Internal?

How to Optimize Your AWS Costs in 2024: Top Vendors, Tips, and More

5 ways platform engineers can help developers create winning APIs

Maintaining Security with DevOps Compliance

Empowering Productivity: Unleashing the Full Potential of Device Management Solutions

The testing pyramid: Strategic software testing for Agile teams

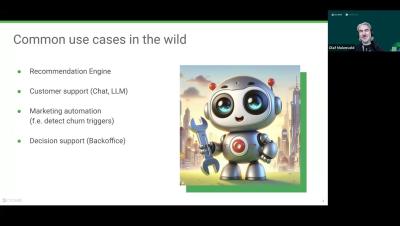

Integrating AI and DevOps for Software Development Teams

The Top 25 Vendor Selection Software and Tools in 2024

Improving Observability With Cloudsmith Logs

How to make the most of Azure Integration Account cost savings?

Basics of Backup and Recovery

Role of Human Oversight in AI-Driven Incident Management and SRE

Blameless CommsAssist - 3 Tips on Making Incident Communication Easy

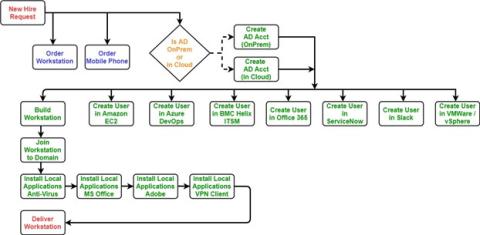

Evolve your Employee Onboarding Process with Automation

AI Explainer: Demystifying Embeddings

DevOps Insights with Dennis: Efficient DevOps - GitLab CI/CD for Docker Builds and ArgoCD Deployments on Kubernetes

What is Bash Scripting? Tutorial and Tips

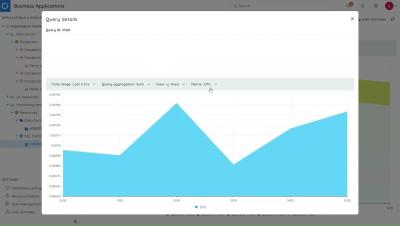

Datadog Software Delivery Demo

How to Uninstall AirDroid Kids on Kid's Device | AirDroid Parental Control

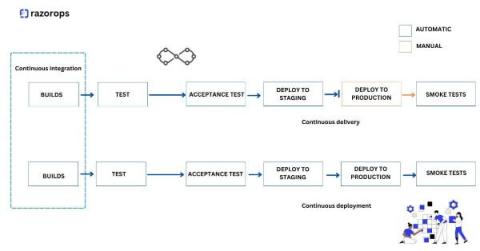

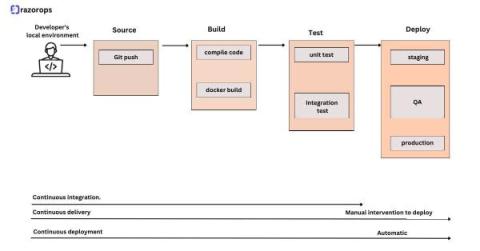

What Is Continuous Delivery and How Does It Work?

Product Insights - What's new and upcoming plans | Netdata Office Hours #13

10 Top Kubernetes Alternatives (And Should You Switch?)

The need for speed: achieving high data rates from data centre to cloud

Confused by Kubernetes Multi-Tenancy? A Workshop with Dario Tranchitella - Navigate Europe 23

How to build DevOps automations with Kosli Actions

Canonical's recipe for High Performance Computing

LLM hallucinations: How to detect and prevent them with CI

Azure Storage cost optimization to achieve maximum cost savings

Azure Unit Economics for Crafting a Financially Sound Strategy

#016 - Kubernetes for Humans Podcast with Marcelo Quadros & Juliano Martins (Mercado Libre)

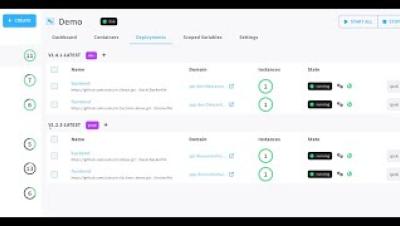

NEW FEATURE: Rainbow Deployments with Zero Downtime! (Jan 24 2024)

Monitor Heroku Add-Ons Using Hosted Graphite

Introducing Rainbow Deployments with Zero Downtime

How Squadcast Helps With Flapping Alerts

Puppet Extends Compliance Enforcement to Support Open-Source Puppet Users in Meeting CIS Benchmarks

The 25 Most Crucial Software Engineering Tools In 2024

Kubernetes Vs. OpenShift

The Financial Services Automation Toolkit for Orchestrating Existing Automations with ITPA

Internet Exchanges and Peering: Unlocking the Benefits

SysAdmin's guide to migrating from CentOS

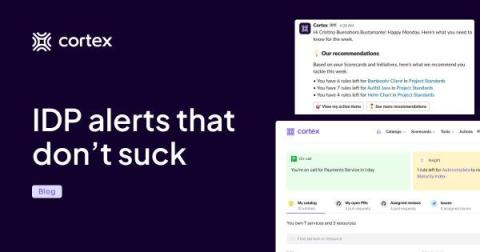

Cortex Notifications: Stay up to date while staying in flow

3 Reasons to Migrate from Ansible to Puppet

Tanzu Talk - Developer Platforms and Productivity, with Serdar Badem

Transforming DevOps with IaC and GitOps - John Dietz & Jared Edwards - Navigate Europe 23

Step-by-step Guide for Monitoring Redis Using Telegraf and MetricFire

Simplifying Service Dependency With Squadcast's Service Graph

AirDroid Personal VS. AirDroid Cast: What's the Difference?

Cloud Scaling: Secrets to Stability + Security When Scaling Cloud Computing

What To Do When A Customer (Or Segment) Is Costing Your SaaS Business Too Much

Streamlining Cloud Costs With Smart Management Strategies

What is microservices architecture?

What Is Kubernetes? What You Need To Know As A Developer

"Our job is not to write code." | #GitKraken CTO Eric Amodio at #Dockercon

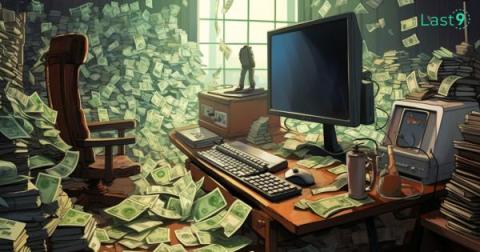

The Frugal Architect, Law I: Make Cost A Non-Functional Requirement

How Gremlin's dependency discovery feature works

The Debrief: Building AI-Related Incidents

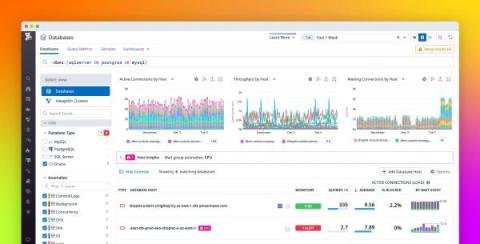

Monitor Oracle managed databases with Datadog DBM

Azure VM Autoscaling to enhance performance and cost efficiency

Understanding Cardinality with Levitate's Cardinality Explorer

Digital Realty and Console Connect Collaborate for Greater Interconnectivity

AirDroid Personal Remote Control FAQs Part 1

How to build an Internal Developer Platform on Kubernetes

What is Hyper-V? Key Features and Capabilities

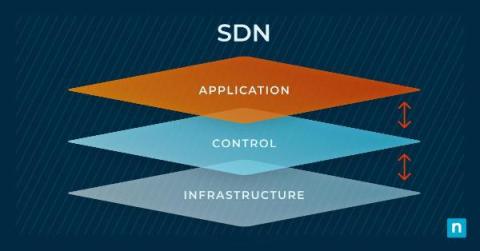

What is Software-Defined Networking (SDN)?

8 Common Network Issues & How to Address Them

Understanding the Role of a DevOps Engineer

How to make CI Pipelines better, explained by Solomon Hykes

NGINX Access and Error Logs

Finding relationships in your data with embeddings

Coming Soon: Cloudsmith Migration Toolkit

Real Production Readiness with Internal Developer Portals

Analyze Your Mailchimp Campaigns Using Telegraf

Conceptual Pillars Of Kubernetes

What's New in Serverless360: Databricks monitoring, Azure Cost group notifications, etc.

Building a GPT-style Assistant for historical incident analysis

The Debrief: incident.io, say hello to AI

Preview: Legal Holds in Mattermost

Terraform Time | Distribute PagerDuty config utilising Terraform Remote State

Mattermost v9.4: IP filtering, bring your own key & cloud-native compliance exports in Mattermost Cloud Enterprise

Mattermost v9.4 includes several new features designed to significantly enhance digital security and compliance: IP filtering, bring your own key (BYOK) for data control, and cloud-native compliance export. IP filtering tightens access control, BYOK offers greater data protection through customer-managed encryption keys, and streamlined compliance reporting helps ensure adherence to regulatory standards.
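IP filtering of this kind generally comes down to checking each client address against a list of allowed CIDR ranges. The sketch below illustrates that idea with Python's standard `ipaddress` module. The ranges in `ALLOWED_RANGES` and the `is_allowed` helper are hypothetical; this is not how Mattermost implements or configures the feature.

```python
import ipaddress

# Hypothetical allowlist: an office range, a VPN range and an IPv6 block.
ALLOWED_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.1.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_allowed(client_ip: str, allowed=ALLOWED_RANGES) -> bool:
    """Return True if client_ip falls inside any allowed CIDR range."""
    addr = ipaddress.ip_address(client_ip)
    # Membership tests across IP versions return False instead of raising,
    # so IPv4 and IPv6 ranges can share one list.
    return any(addr in net for net in allowed)


print(is_allowed("10.1.2.3"))     # True
print(is_allowed("203.0.113.7"))  # False
```

A real deployment would also have to decide which address counts as the client when traffic passes through proxies or load balancers.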

Why Spain Should Be Your Next Network Expansion Target

This European country is poised to make its mark in the cloud networking industry. Here’s why it should be the next addition to your enterprise network.

The alert fatigue dilemma: A call for change in how we manage on-call

Once the unsung heroes of the digital realm, engineers are now caught in a cycle of perpetual interruptions thanks to alerting systems that haven't kept pace with evolving needs. A constant stream of notifications has turned on-call duty into a source of frustration, stress, and poor work-life balance. In 2021, 83% of software engineers surveyed reported feelings of burnout from high workloads, inefficient processes, and unclear goals and targets.

5 Reasons Why GitKraken Client is the Ultimate Tool for GitHub Users

Experience Omni Dev with CodeZero with Narayan Sainaney - Navigate Europe 23

How to Start Contributing to Open Source Project with Mauricio Salatino

The Benefits of Using DCIM Software for Data Center Cable Management

New CNCF Survey Highlights GitOps Adoption Trends - 91% of Respondents Are Already Onboard

How to Monitor Your RabbitMQ Performance Using Telegraf

Stages of a CI/CD Pipeline

Rancher Live: Spotlight on Kubernetes v1.29

Lessons learned from building our first AI product

Does Every Incident Need a Retrospective? Here's What the Experts Have to Say

Every quarter, we host a roundtable discussion centered around the challenges encountered by incident responders at the world’s leading organizations. These discussions are lightly facilitated and vendor-agnostic, with a carefully curated group of experts. Everyone brings their own unique perspective and experience to the group as we dive deep into the real-world challenges incident responders are facing today.

From Git to Deployed - Fast Platform Delivery - Colin Griffin & Dinesh Majrekar - Navigate Europe 23

Kelsey Hightower on retiring as a Distinguished Engineer from Google at 42

Continuous Compliance Content Hub

The Continuous Compliance content hub is a set of guides for DevOps teams who need to move fast while remaining in compliance for audit and security purposes. We know that the old change management processes for software releases that happened once every 6 months don’t scale for DevOps teams who want to deploy every day. This is where Continuous Compliance comes in.

Effortlessly keep EKS AMI up to date with Spot Ocean

When CloudWatch Won't Do: 15 Best CloudWatch Alternatives In 2024

Test-driven development (TDD) explained

Ep. 12: Let's talk serverless: A discussion with AWS hero Yan Cui

What is GitHub? + Powerful Integrations #shorts #GitHub #GitKraken

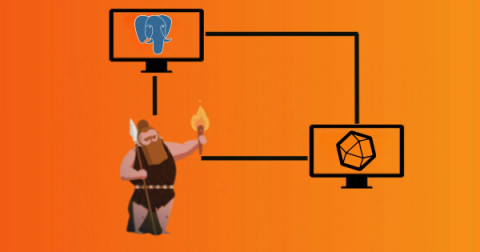

How to Monitor PostgreSQL With Telegraf and MetricFire

Monitoring-as-Code for Scaling Observability

As data volumes continue to grow and observability plays an ever-greater role in ensuring optimal website and application performance, responsibility for end-user experience is shifting left. This can create a messy situation in which hundreds of R&D members, from back-end engineers and front-end teams to DevOps engineers and SREs, are all shipping data and creating their own dashboards and alerts.

How To Troubleshoot False Alerts in Netreo

DevOps Change Management Resources

IaaS Providers Making Early Changes in 2024

A new year has started, and some of the major IaaS providers are already making significant changes. AWS and GCP have both announced changes that might signal what's to come this year.

Software Selection: Your Company's Future Depends on Getting it Right

What is Git?

Unleashing the power of AI and automation for effective Cloud Cost Optimization in 2024

Collecting OpenShift container logs using Red Hat's OpenShift Logging Operator

Q&A: What IT Automation Best Practices Should You Know Right Now? - Part 2

With a limitless load of questions on IT automation and the industry’s biggest trends, Resolve’s “Ask Me Anything (AMA)” session went about tackling them in an all-new way. We threw out the preparation, we threw out the scripts, and we asked our community to submit the questions that matter most to them and their organizations. Part of our leadership team took the hot seat and provided answers in real time, sans dress rehearsal.

8 Strategies for Reducing Alert Fatigue

Site Reliability Engineers (SREs) and DevOps teams often deal with alert fatigue: they receive so many alerts that it becomes hard to keep up, which slows their response and adds stress to their existing responsibilities. According to a study, 62% of participants noted that alert fatigue played a role in employee turnover, while 60% reported that it caused internal conflicts within their organization.

Supercharged with AI

Take control of user management with Cloudsmith's new SCIM capabilities

Cloudsmith announces expanded support for System for Cross-domain Identity Management (SCIM) for user management and enhanced software supply chain security.
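SCIM 2.0 (RFC 7643 for the schema, RFC 7644 for the protocol) standardizes the JSON an identity provider sends when it provisions users. As a rough illustration, the sketch below builds a minimal core `User` resource. The `scim_user` helper and the sample values are hypothetical, and since Cloudsmith's SCIM endpoint URL is not given here, the sketch only builds the payload rather than sending it.

```python
import json

# Schema URN for the SCIM 2.0 core User resource (RFC 7643).
SCIM_USER_SCHEMA = "urn:ietf:params:scim:schemas:core:2.0:User"


def scim_user(user_name: str, email: str, given_name: str,
              family_name: str, active: bool = True) -> dict:
    """Build a minimal SCIM 2.0 User resource (hypothetical helper)."""
    return {
        "schemas": [SCIM_USER_SCHEMA],
        "userName": user_name,
        "name": {"givenName": given_name, "familyName": family_name},
        "emails": [{"value": email, "primary": True}],
        # Identity providers commonly deprovision by flipping this to False.
        "active": active,
    }


payload = scim_user("jdoe", "jdoe@example.com", "Jane", "Doe")
print(json.dumps(payload, indent=2))
```

An identity provider would typically POST such a payload to the service provider's `/Users` endpoint and later update or deactivate the user with PUT, PATCH, or DELETE requests.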

GitHub Pull Request Management with GitKraken Client

Let’s dive into the world of pull requests (PRs). They’re the bridges connecting your hard work to the bigger project, facilitating code review, collaboration, and more. But why are they so crucial, and how can tools like GitKraken Client and GitHub take their management to the next level? Keep reading to explore the unique features of both platforms, plus time-saving tips for efficient PR management.

EKS Add-ons And Integrations: Evaluating Cost Impacts

The Catchpoint 2024 SRE Report - Five Key Takeaways

Mattermost v9.4 Changelog

Easily Monitor URL and IP Availability Using Telegraf with Ping

Unlock the Secrets of Machine Learning: A Beginner's Guide with Josh Mesout - Navigate Europe 23

Azure Resource Monitoring: Setting Up Key Metrics Made Simple!

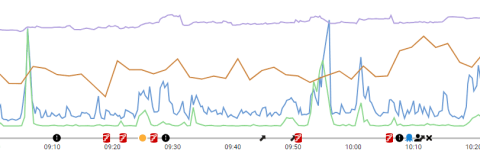

How to monitor Azure Automation Runbooks?

Identify and rectify network issues proactively with the OpManager-Jira integration

Does Anonymous Web Hosting Really Make You Anonymous?

Marking deployments and more in Redgate Monitor

Mastering IT Alerting: A Short Guide for DevOps Engineers

$575 million was the cost of a huge IT incident that hit Equifax, one of the largest credit reporting agencies in the U.S. In September 2017, Equifax announced a data breach that impacted approximately 147 million consumers. The breach occurred due to a vulnerability in the Apache Struts web application framework, which Equifax failed to patch in time. This vulnerability allowed hackers to access the company's systems and exfiltrate sensitive data.

Debugging Go compiler performance in a large codebase

As we’ve talked about before, our app is a monolith: all our backend code lives together and gets compiled into a single binary. One of the reasons I prefer monolithic architectures is that they make it much easier to focus on shipping features without having to spend much time thinking about where code should live and how to get all the data you need together quickly. However, I’m not going to claim there aren’t disadvantages too. One of those is compile times.

Managing software in complex network environments: the Snap Store Proxy

What is Infrastructure as Code (IaC)?

5 Ways CloudZero Found Savings Using Its Own Platform

"The first versions of Copilot were just...comically bad." | GitKraken's Eric Amodio at #Dockercon

Enhancing Data Center Efficiency and Sustainability with Power Capacity Effectiveness

Power Capacity Effectiveness (PCE) is a performance metric that evaluates how effectively power is used in data centers. It measures the ratio of IT equipment power to the total power consumed, encompassing all aspects of the facility's operations, including cooling and lighting. Unlike traditional metrics, PCE aims to provide a comprehensive assessment of how power is used within data centers, with the goal of optimizing the power capacity actually available.
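Taking the ratio exactly as described above (IT equipment power divided by total facility power), a worked calculation is short. This is a sketch of that ratio only; the product behind PCE may factor in capacity as well as consumption, and `it_power_share` plus the 600 kW / 1,000 kW figures are hypothetical. The reciprocal is shown because it corresponds to the familiar PUE (total facility power divided by IT equipment power).

```python
def it_power_share(it_equipment_kw: float, total_facility_kw: float) -> float:
    """Fraction of total facility power that reaches IT equipment."""
    if total_facility_kw <= 0:
        raise ValueError("total facility power must be positive")
    if not 0 <= it_equipment_kw <= total_facility_kw:
        raise ValueError("IT power must be between 0 and total facility power")
    return it_equipment_kw / total_facility_kw


# Hypothetical facility: 600 kW to IT out of 1,000 kW total (the rest is
# cooling, lighting, power distribution losses and so on).
share = it_power_share(600, 1000)
print(share)      # 0.6
print(1 / share)  # PUE-style inverse, about 1.67
```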

Supercharge FinOps Programs with Resource Guardrails

Time and time again, we hear the same statements from FinOps teams about what is holding back optimization of wasteful cloud resource consumption. Engineers and app owners are interested in helping but stop short of actually taking action to reduce that waste. There are many reasons why application owners and developers get stuck at this point.

Building Controllers with Python Made Easy with Steve Giguere - Navigate Europe 23

Why Kubernetes For Developers is the Next Big Thing

What is RMM software?

Azure App Service Pricing (2024)

Supercharge FinOps Programs with Cloud Resource Optimization

Understanding ISO27001 Security - and why DevOps teams choose Kosli

Modern software delivery teams find themselves under constant pressure to maintain security and compliance without slowing down the speed of development. This usually means that they have to find a way of using automation to ensure robust governance processes that can adapt to evolving cyber threats and new regulatory requirements.

A Guide to Continuous Security Monitoring Tools for DevOps

DevOps has accelerated the delivery of software, but it has also made it harder to stay on top of compliance issues and security threats. When applications, environments and infrastructure are constantly changing, keeping a handle on compliance and security becomes increasingly difficult. For fast-moving teams, real-time security monitoring has become essential for quickly identifying risky changes so they can be remediated before they result in a security failure.

Non-Abstract Large System Design (NALSD): The Ultimate Guide

Non-Abstract Large System Design (NALSD) is an approach to designing large systems in which each design iteration is grounded in concrete estimates of the resources it needs, such as machines, network bandwidth, and storage, rather than remaining an abstract diagram. It holds particular importance for Site Reliability Engineers (SREs) because it aligns closely with the core principles and goals of SRE practice. It improves the reliability of systems, allows for scalable architectures, optimizes performance, encourages fault tolerance, streamlines monitoring and debugging, and enables efficient incident response.
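In practice, NALSD is usually exercised through back-of-the-envelope arithmetic that turns a design into machine and storage counts. The sketch below shows two such estimates; every input (peak QPS, per-server throughput, 30% headroom, two spare servers, item size, three replicas) is a hypothetical assumption chosen only to illustrate the method.

```python
import math


def servers_needed(peak_qps: int, qps_per_server: int,
                   headroom_pct: int = 30, spares: int = 2) -> int:
    """Servers to carry peak load at (100 - headroom_pct)% utilization, plus spares."""
    usable_qps = qps_per_server * (100 - headroom_pct) / 100
    return math.ceil(peak_qps / usable_qps) + spares


def storage_tb(items_per_day: int, bytes_per_item: int,
               retention_days: int, replicas: int = 3) -> float:
    """Raw storage in decimal terabytes, counting every replica."""
    return items_per_day * bytes_per_item * retention_days * replicas / 1e12


# Hypothetical service: 50,000 peak QPS, each server handles 2,000 QPS.
print(servers_needed(50_000, 2_000))          # 38 (36 for load + 2 spares)
# Hypothetical data: 100M items/day at 2 KB each, kept for a year.
print(storage_tb(100_000_000, 2_000, 365))    # 219.0
```

The value of the exercise is less the exact numbers than the fact that each design choice (headroom, redundancy, retention) becomes an explicit, reviewable input.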

Set Resource Requests and Limits Correctly: A Kubernetes Guide

Kubernetes has revolutionized the world of container orchestration, enabling organizations to deploy and manage applications at scale with unprecedented ease and flexibility. Yet, with great power comes great responsibility, and one of the key responsibilities in the Kubernetes ecosystem is resource management. Ensuring that your applications receive the right amount of CPU and memory resources is a fundamental task that impacts the stability and performance of your entire cluster.
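Kubernetes expresses CPU and memory as quantity strings such as `500m` (half a CPU) or `256Mi` (256 mebibytes), and the API rejects a container whose request exceeds its limit. The sketch below parses a common subset of those suffixes and flags two problems in a container's `resources` block. The `check_resources` helper and its "no request or limit" warning are hypothetical review heuristics, not Kubernetes behavior; note that when only a limit is set, Kubernetes uses the limit as the request.

```python
import re
from decimal import Decimal

# Subset of Kubernetes quantity suffixes: milli (m), decimal (k, M, G, T)
# and binary (Ki, Mi, Gi, Ti). Exponent forms such as "1e3" are not handled.
_MULTIPLIERS = {
    "": Decimal(1),
    "m": Decimal("0.001"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9, "T": Decimal(10) ** 12,
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20,
    "Gi": Decimal(2) ** 30, "Ti": Decimal(2) ** 40,
}
_QUANTITY = re.compile(r"(\d+(?:\.\d+)?)(Ki|Mi|Gi|Ti|m|k|M|G|T)?")


def parse_quantity(value: str) -> Decimal:
    """Convert a quantity string like '500m' or '256Mi' to a Decimal."""
    match = _QUANTITY.fullmatch(value)
    if not match:
        raise ValueError(f"unsupported quantity: {value!r}")
    number, suffix = match.groups()
    return Decimal(number) * _MULTIPLIERS[suffix or ""]


def check_resources(resources: dict) -> list[str]:
    """Return human-readable problems with a container's resources block."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    problems = []
    for name in ("cpu", "memory"):
        if name not in requests and name not in limits:
            problems.append(f"{name}: no request or limit set")
        elif name in requests and name in limits:
            if parse_quantity(requests[name]) > parse_quantity(limits[name]):
                problems.append(f"{name}: request exceeds limit")
    return problems


print(check_resources({
    "requests": {"cpu": "500m", "memory": "256Mi"},
    "limits": {"cpu": "1", "memory": "128Mi"},
}))  # ['memory: request exceeds limit']
```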

Introducing GitOps Versions: A Unified Way to Version Your Argo CD Applications

Last month, we announced our new GitOps Environment dashboard that finally allows you to promote Argo CD applications easily between different environments.

Data Center Liquid Cooling 101

As rack densities in data centers increase to support power-hungry applications like Artificial Intelligence and high-performance compute (HPC), data center professionals struggle with the limited cooling capacity and energy efficiency of traditional air cooling systems. In response, a potential solution has emerged in liquid cooling, a paradigm shift from traditional air-based methods that offers a more efficient and targeted approach to thermal management.

Protect Against Netscaler Vulnerability CitrixBleed

Cloud-native infrastructure - When the future meets the present

What's in store for AI in 2024 with Patrick Debois

Transform Your Customer Experience with DevOps Collaboration

Prompt engineering: A guide to improving LLM performance

Prompt engineering is the practice of crafting input queries or instructions to elicit more accurate and desirable outputs from large language models (LLMs). It is a crucial skill for working with artificial intelligence (AI) applications, helping developers achieve better results from language models. Prompt engineering involves strategically shaping input prompts, exploring the nuances of language, and experimenting with diverse prompts to fine-tune model output and address potential biases.
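One common prompt engineering technique is few-shot prompting: showing the model a handful of worked input/output pairs before the real query, so the expected format and labels are unambiguous. The sketch below only assembles such a prompt as a string and does not call any model; the `Example` class, `build_few_shot_prompt` helper and the alert-classification task are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    text: str
    label: str


def build_few_shot_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Instruction first, then worked examples, then the open query."""
    lines = [instruction.strip(), ""]
    for ex in examples:
        lines += [f"Input: {ex.text}", f"Output: {ex.label}", ""]
    # Ending on "Output:" nudges the model to answer in the same format.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


examples = [
    Example("CPU at 98% on web-3 for 10 minutes", "warning"),
    Example("Checkout API returning 500 for all users", "critical"),
]
prompt = build_few_shot_prompt(
    "Classify each alert as info, warning, or critical. Answer with one word.",
    examples,
    "Disk 100% full on primary database",
)
print(prompt)
```

Keeping the examples as data makes it easy to experiment with different sets of examples and compare model output, which is the iterative part of prompt engineering.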

How to Build an App with Spin and Wasm with Matt Butcher & Saiyam Pathak - Navigate Europe 23

Scaling Down Kubernetes Clusters

IoT Monitoring Challenges

Cyber Security - The Beacon of Trust

As John Lennon once said, another year over…and a new one just begun. As we head into 2024, it’s important to reflect on what we’ve seen and where we need to focus in the year ahead.

Rancher Vs. OpenShift

The Last Mile of Observability - Fine-Tuning Notifications for More Timely Alerts

No one wants to get an alert in the middle of the night. No one wants their Slack flooded to the point of opting out from channels. And indeed, no one wants an urgent alert to be ignored, spiraling into an outage. Getting the right alert to the right person through the right channel — with the goal of initiating immediate action — is the last mile of observability.
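"The right person through the right channel" can be made concrete as a routing decision: map each alert's service to an owner and its severity to a delivery channel. The sketch below is a deliberately small illustration; the `OWNERS` and `CHANNELS` tables, the fallback owner, and the channel names are all hypothetical policy choices, not any particular product's configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    service: str
    severity: str  # "critical", "warning" or "info"


# Hypothetical ownership map and channel policy.
OWNERS = {"payments": "alice", "search": "bob"}
CHANNELS = {"critical": "page", "warning": "chat", "info": "daily-digest"}


def route(alert: Alert, default_owner: str = "platform-on-call") -> tuple[str, str]:
    """Pick who gets the alert and through which channel."""
    owner = OWNERS.get(alert.service, default_owner)
    # Unknown severities fall back to the least intrusive channel.
    channel = CHANNELS.get(alert.severity, "daily-digest")
    return owner, channel


print(route(Alert("payments", "critical")))  # ('alice', 'page')
print(route(Alert("billing", "warning")))    # ('platform-on-call', 'chat')
```

Only critical alerts page someone at night in this policy; everything else lands in lower-urgency channels, which is one way to keep Slack from flooding.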

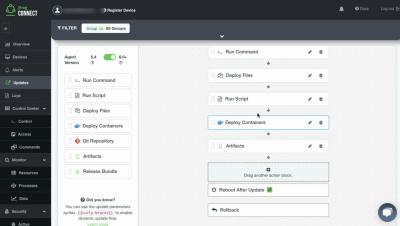

IoT Management with JFrog Connect (5-Minute Demo)

Cloudsmith x PagerDuty

Azure Automation is more than just PowerShell

Azure Automation is a powerful IT Automation service in use by thousands of organisations. Many organisations are using Azure Automation just as a PowerShell runbook execution service and are unaware of its wider capabilities.

How Can You Navigate the CNCF Ecosystem? Insights from Kunal Kushwaha - Navigate Europe 23

User Panel discussion! | Netdata Office Hours #12

The SaaS Revenue Model: 5 Types To Consider

What is Kiosk Mode? Features and Use Cases

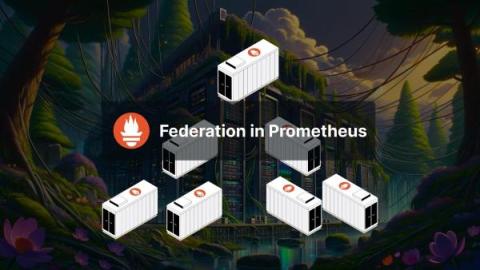

Prometheus Federation: Scaling Prometheus Guide

What is a sovereign cloud?

High Performance Computing - It's all about the bottleneck

Database monitoring and optimization | PostgreSQL 101

Introduction to Multi-Version Concurrency Control and Vacuum | PostgreSQL 101

Q&A: IT Automation Best Practices for 2024, Part One

Editor’s Note: This blog is the first of a two-part series that recaps our first-ever “Ask Me Anything (AMA)” session. Part 2, to include questions 5-9, is set to publish next Tuesday. Seems like there’s an overload of burning, tough questions surrounding IT automation and orchestration, doesn’t it?

Is YAML Essential for Kubernetes? Engin Diri Explores Alternatives - Navigate Europe 2023

What are networks?

Receive zipped messages (or files) in BizTalk Server Solutions

SLOs with Prometheus done wrong, wrong, wrong, wrong, then right

Easy Ways to Fix Unrecognized Database Format Error in MS Access

What Is A Cloud Engineer? Here's a Quick Breakdown

What is an Internal Developer Portal?

Managed GCP GKE Autopilot Released in Public Beta

5 open source projects to contribute to in 2024

Contributing to open source software helps you develop new skills, gain real-world coding experience, interact with new technologies, and meet new people. But with so many open source projects to choose from — developers started some 52 million new projects on GitHub in 2022 alone — it can be difficult to figure out which repositories to contribute to. If you’re thinking about joining a new open source project in 2024, you’ve come to the right place.

How Can Kubernetes Thrive in Regulated Environments? Insights from Ryan Gutwein - Navigate Europe 23

How to optimize your cloud infrastructure management

As on-premises infrastructure and workloads increasingly migrate to the cloud, you’ve undoubtedly encountered many challenges in managing complex cloud architectures. These hurdles include juggling cost-efficiency and security to maintain a seamless, high-performance infrastructure. Navigating your cloud infrastructure landscape requires thoroughly understanding its virtualized elements—servers, software, network devices, and storage.

Understanding roles in software operators

Succeeding with Teams Phone in 2024

Moving to Teams Phone as your primary voice system can save money and provide a great user experience, or it can “crash and burn”. In a two-part workshop, I had the opportunity to explore insights to help migrate successfully to Teams Phone with Greg Zweig of Ribbon. (Ribbon was kind enough to sponsor both workshop sessions.) This article summarizes the information we covered in the workshop.

Video analytics at the edge: How video processing benefits from edge computing

Computer vision: digital understanding of the physical world. From face recognition to fire prevention, autonomous cars to medical diagnosis, the promise of video analytics has enticed technology innovators for years. Video analytics, the processing and analysing of visual data through machine learning and artificial intelligence, is perceived as a significant opportunity for edge computing.

EP2: The Unsustainable Truth: Data Centers' Dirty Little Secret w/ Dean Nelson

Monitor Amazon EC2: key metrics for instances, regions, and more in one view

Amazon EC2 was one of the first services available on AWS, helping propel the cloud platform into the mainstream of IT. And while EC2 instances come in a wide range of sizes and flavors to address all sorts of use cases, keeping tabs on those instances isn’t always easy. That’s why we’re excited to introduce our new EC2 monitoring solution in Grafana Cloud.

Effective strategies for managing cron jobs: Best practices and tools

Building a Custom Read-only Global Role with the Rancher Kubernetes API

In 2.8, Rancher added a new field to the GlobalRoles resource (inheritedClusterRoles), which allows users to grant permissions on all downstream clusters. With the addition of this field, it is now possible to create a custom global role that grants user-configurable permissions on all current and future downstream clusters. This post will outline how to create this role using the new Rancher Kubernetes API, which is currently the best-supported method to use this new feature.
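A GlobalRole that uses the new field might look roughly like the manifest below, built in Python and printed as JSON (which `kubectl apply -f -` accepts). Treat it as a sketch: the role name is hypothetical, and `cluster-read-only` is assumed to be a cluster-context RoleTemplate that already exists in your Rancher installation, so create or substitute your own. It is also assumed to be applied against the Rancher management (local) cluster.

```python
import json


def read_only_global_role(name: str = "all-clusters-read-only",
                          role_template: str = "cluster-read-only") -> dict:
    """Build a GlobalRole manifest granting a cluster RoleTemplate on all downstream clusters.

    Both default names are hypothetical placeholders.
    """
    return {
        "apiVersion": "management.cattle.io/v3",
        "kind": "GlobalRole",
        "metadata": {"name": name},
        "displayName": "All Clusters Read-only",
        # Added in Rancher v2.8: RoleTemplates listed here apply to all
        # current and future downstream clusters.
        "inheritedClusterRoles": [role_template],
    }


if __name__ == "__main__":
    # Example: python global_role.py | kubectl apply -f -
    print(json.dumps(read_only_global_role(), indent=2))
```

Users would still need a GlobalRoleBinding to this role before it grants them anything.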

Avoiding vendor lock-in with your IDP

Time-Saving GitKraken Client Features PART 1 #shorts

Time-Saving GitKraken Client Features PART 2 #shorts

The Unsustainable Truth: Data Centers' Dirty Little Secret

Learn more about how modern DCIM software is being used to measure the power capacity effectiveness (PCE) of data centers. Schedule a free one-on-one demo of Hyperview today.

Introducing Squadcast's Intelligent Alert Grouping and Snooze Notifications

The SRE Report 2024 Reveals State of Site Reliability Engineering

Mastering NGINX Monitoring: Comprehensive Guide to Essential Tools

NGINX, a versatile open-source web server, reverse proxy, and load balancer, stands out for its exceptional performance and scalability. Monitoring NGINX is pivotal for keeping it running optimally. By tracking and analysing performance, including real-time insights into server health, resource utilization, and user requests, administrators can identify issues proactively.
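A common starting point for NGINX monitoring is the plain-text page served by `ngx_http_stub_status_module` (enabled with the `stub_status` directive in a `location` block). The sketch below parses that page into counters; the sample text is illustrative, and fetching it from your own status URL is left out so the example runs offline. The `accepts`, `handled` and `requests` values are cumulative, so rates come from differencing two samples.

```python
import re

# Illustrative output in the stub_status format.
SAMPLE = """Active connections: 291
server accepts handled requests
 16630948 16630948 31070465
Reading: 6 Writing: 179 Waiting: 106
"""


def parse_stub_status(text: str) -> dict:
    """Parse NGINX stub_status text into integer counters."""
    active = re.search(r"Active connections:\s*(\d+)", text)
    totals = re.search(r"^[ \t]*(\d+)[ \t]+(\d+)[ \t]+(\d+)[ \t]*$", text, re.MULTILINE)
    states = re.search(r"Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)", text)
    if not (active and totals and states):
        raise ValueError("unrecognized stub_status output")
    accepts, handled, requests = map(int, totals.groups())
    reading, writing, waiting = map(int, states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        # accepts > handled usually means a resource limit such as
        # worker_connections was reached.
        "dropped": accepts - handled,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
    }


print(parse_stub_status(SAMPLE))
```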

Tech trends to watch in 2024

The SRE Report 2024: Essential Considerations for Readers

If you Google, “What is the shortest, complete sentence in American English?”, then you may get, “I am” as the first answer. However, “Go” is also considered a grammatically correct sentence, and is shorter than, “I am”.

Observability trends and predictions for 2024: CI/CD observability is in. Spiking costs are out.

From AI to OTel, 2023 was a transformative year for open source observability. While the advancements we made in open source observability will be a catalyst for our continued work in 2024, there is even more innovation on the horizon. We asked seven Grafanistas to share their predictions for which observability trends are on their “In” list for 2024. Here’s what they had to say.

The role of the CI/CD pipeline in cloud computing

The Continuous Integration/Continuous Deployment (CI/CD) pipeline has become a cornerstone of the fast-evolving world of software development, particularly in cloud computing. This blog aims to demystify how CI/CD, a set of practices that streamline software development, enhances the agility and efficiency of cloud computing.

An Introduction to Civo Cloud - A Complete Guide with Field CTO Saiyam Pathak - Civo.com

Deploying A Database to Cycle

Shared File Systems on Cycle

Best practices for CI/CD monitoring

Modern-day engineering teams rely on continuous integration and continuous delivery (CI/CD) providers, such as GitHub Actions, GitLab, and Jenkins to build automated pipelines and testing tools that enable them to commit and deploy application code faster and more frequently.
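Whatever the CI/CD provider, two of the first pipeline health signals teams usually track are failure rate and typical run duration per pipeline. The sketch below computes both from a list of run records; the `PipelineRun` shape and sample data are hypothetical, since each provider exposes run history through its own API.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class PipelineRun:
    pipeline: str
    status: str        # "success" or "failure"
    duration_s: float  # wall-clock duration of the run


def summarize(runs: list[PipelineRun]) -> dict:
    """Per-pipeline run count, failure rate and median duration."""
    by_pipeline: dict[str, list[PipelineRun]] = {}
    for run in runs:
        by_pipeline.setdefault(run.pipeline, []).append(run)
    summary = {}
    for name, group in by_pipeline.items():
        failures = sum(1 for r in group if r.status == "failure")
        summary[name] = {
            "runs": len(group),
            "failure_rate": failures / len(group),
            # Median is less skewed than the mean by one stuck run.
            "median_duration_s": median(r.duration_s for r in group),
        }
    return summary


runs = [
    PipelineRun("build", "success", 300),
    PipelineRun("build", "failure", 120),
    PipelineRun("build", "success", 360),
    PipelineRun("deploy", "success", 90),
]
print(summarize(runs))
```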

Open Source Automation Tools: The Most Popular Options + How to Choose

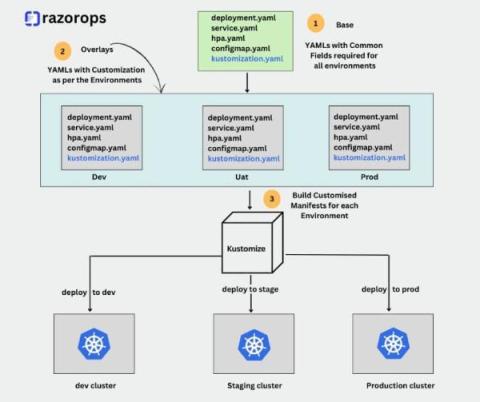

What is Kustomize?

In the dynamic realm of container orchestration, Kubernetes stands tall as the go-to platform for managing and deploying containerized applications. However, as the complexity of applications and infrastructure grows, so does the challenge of efficiently managing configuration files. Enter Kustomize, a powerful tool designed to simplify and streamline Kubernetes configuration management.

BizTalk Server to Azure Integration Services: Send zipped messages (or files)

Team Komodor Does Klustered with David Flannagan (AKA Rawkode)

An elite DevOps team from Komodor takes on the Klustered challenge; can they fix a maliciously broken Kubernetes cluster using only the Komodor platform? Let’s find out! Watch Komodor’s Co-Founding CTO, Itiel Shwartz, and two engineers – Guy Menahem and Nir Shtein leverage the Continuous Kubernetes Reliability Platform that they’ve built to showcase how fast, effortless, and even fun, troubleshooting can be!

Kubernetes Networking: Understanding Services and Ingress

Kubernetes has transformed how containerized applications are deployed and managed. At the core of its capabilities lies a sophisticated networking model, a resilient framework that enables communication between microservices and manages external access to applications. Two foundational elements of this model are Kubernetes Services and Ingress.
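An Ingress maps HTTP paths to backend Services, and Kubernetes documents how conflicting rules are resolved: `Prefix` paths match element by element on `/` (so `/api` matches `/api/users` but not `/apiary`), the longest matching path wins, and on a tie `Exact` beats `Prefix`. The sketch below models only that path selection; it ignores hosts, the `ImplementationSpecific` path type and controller-specific behavior, and the rule and Service names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    path: str
    path_type: str  # "Exact" or "Prefix"
    service: str


def _elements(path: str) -> list[str]:
    # "/api/users/" -> ["api", "users"]; "/" -> []
    return [part for part in path.split("/") if part]


def matches(rule: Rule, request_path: str) -> bool:
    if rule.path_type == "Exact":
        return request_path == rule.path
    prefix = _elements(rule.path)
    return _elements(request_path)[:len(prefix)] == prefix


def select_backend(rules: list[Rule], request_path: str) -> Optional[str]:
    """Longest matching path wins; Exact beats Prefix on equal length."""
    candidates = [r for r in rules if matches(r, request_path)]
    if not candidates:
        return None
    best = max(candidates, key=lambda r: (len(r.path), r.path_type == "Exact"))
    return best.service


rules = [
    Rule("/", "Prefix", "frontend"),
    Rule("/api", "Prefix", "api"),
    Rule("/api/health", "Exact", "health"),
]
print(select_backend(rules, "/api/users"))  # api
print(select_backend(rules, "/apiary"))     # frontend
```

Once a Service is chosen, the Service itself load-balances across its ready Pods.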

How Squadcast's Workflows Enhance Incident Management Automation?

How to Calculate and Minimize Downtime Costs

Downtime is an unwelcome reality. Beyond the immediate disruption, outages carry a significant financial burden, affecting revenue, customer satisfaction, and brand reputation. For SREs and IT professionals, understanding the cost of downtime is crucial to mitigating its impact and building more resilient infrastructure.
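A simple first estimate multiplies outage duration by revenue lost per hour (scaled by the share of customers affected) and adds the cost of the people responding. The sketch below does that, plus the related calculation of how much downtime an availability target allows. The function names and every input figure are hypothetical, and the model deliberately leaves out harder-to-quantify costs such as SLA penalties, churn and reputational damage.

```python
def allowed_downtime_minutes(availability_pct: float, period_days: int = 30) -> float:
    """Minutes of downtime an availability target permits over a period."""
    return period_days * 24 * 60 * (1 - availability_pct / 100)


def downtime_cost(minutes: float, revenue_per_hour: float,
                  affected_share: float = 1.0, responders: int = 0,
                  hourly_rate: float = 0.0) -> float:
    """Lost revenue plus incident-response labor for one outage."""
    hours = minutes / 60
    lost_revenue = revenue_per_hour * affected_share * hours
    response_labor = responders * hourly_rate * hours
    return lost_revenue + response_labor


# 99.9% over 30 days leaves roughly 43.2 minutes of budget.
print(round(allowed_downtime_minutes(99.9), 1))  # 43.2
# Hypothetical 45-minute outage hitting half of a $120k/hour business,
# with four responders at $100/hour.
print(downtime_cost(45, 120_000, affected_share=0.5, responders=4, hourly_rate=100))  # 45300.0
```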

Popular Kubernetes Distributions You Should Know About

In modern application deployment, orchestrating containers with Kubernetes is essential for achieving scalability and operational efficiency. This blog post surveys several Kubernetes distributions, each offering tailored solutions for organizations navigating the intricacies of containerized application management.

Webinar: "Is it Done Yet?" - Defining Production Readiness with Internal Developer Portals

AI in 2024 - What does the future hold?

Azure Not For You? Here Are 10 Azure Alternatives

A recap of 2023

Last year we decided to just keep our heads down and continue working on a good, reliable product (#bootstrapped). Most features we built were based on your feedback. Thank you so much. 2024 is going to be great, but first, let's look back at the year gone by.

Rancher Live: What's the buzz with Cilium?

Inside the CloudCheckr Well-Architected Readiness Advisor

Manage PRs, Issues & WIPs within your #Terminal | GitKraken CLI #shorts

Deployment Frequency: Why and How To Measure It

Announcing the Rancher Kubernetes API

It is our pleasure to introduce the first officially supported API with Rancher v2.8: the Rancher Kubernetes API, or RK-API for short. Since the introduction of Rancher v2.0, a publicly supported API has been one of our most requested features. The Rancher APIs, which you may recognize as v3 (Norman) or v1 (Steve), have never been officially supported and can only be automated using our Terraform Provider.

Inside Operator Day: Mastering software operators for Data and AI

Simplifying MongoDB Operations

Enlightning - Be a Security Hero with Kubescape as Your Sidekick

Revolution in Development: Mark Allen's Code Zero & Kubernetes Top Tips - Navigate Europe 23

Boost Your Software Deliveries with Docker and Kubernetes

Speed and efficiency in software delivery are paramount. The ability to deploy, manage, and scale applications swiftly can make a significant difference in staying ahead in the competitive tech industry. Enter Docker and Kubernetes, two revolutionary technologies that have transformed the way we develop, deploy, and manage software.

Shifting Left with FinOps: A Developer-Centric Approach to Managing Azure Costs

Speedscale vs. LocalStack for Realistic Mocks

What sets OpManager apart as reliable virtual server management software?

Cortex QuickStart

Taking the Gold Rush to the regions

2023 was the year of Artificial Intelligence (AI). 2024 will build on the incredible momentum of the likes of ChatGPT, Google Bard, Microsoft Copilot, and others, delivering applications and services that apply AI to every industry imaginable. A recent analysis piece from Schroders makes the point well: “The mass adoption of generative Artificial Intelligence (AI) …has sparked interest akin to the Californian Gold Rush.”

Why your monitoring costs are high

Harmony in Chaos: Uniting Team Autonomy with End-to-End Observability for Business Success

Imagine a symphony where every musician plays their part flawlessly, but without a conductor to guide the orchestra, the result is just a discordant mess. Now apply that image to the modern IT landscape, where development and operations teams work with remarkable autonomy, each expertly playing their part. Agile methodologies and DevOps practices have empowered teams to build and manage their services independently, resulting in an environment that accelerates innovation and development.

Securing Software Development: Marino Wijay's Expert Insights - Navigate Europe 23

Terraform Time - Opening 2024 with PagerDuty via Terraform

Time for Some More Meaningful Data Center Metrics?

Our CEO, Jad Jebara, joins the Digitalisation World podcast to share insights into how the data center industry, prompted by the need for meaningful environmental reporting, can work towards a truly sustainable future by focusing on the metrics that matter. See first-hand how modern DCIM software is being used to manage hybrid IT environments. Schedule a free one-on-one demo of Hyperview today.

Rack PDU Management Trends for 2024

As data centers grow more complex and power-hungry, rack PDUs are an increasingly important component of data center power circuits. Modern intelligent rack PDUs have many advanced features and work seamlessly with Data Center Infrastructure Management (DCIM) software to provide a complete solution for monitoring and managing data center infrastructure. Let’s delve into the key trends shaping rack PDU management in 2024 and beyond.

Modernizing Financial Services with Automated, Proactive Threat Management

Financial services institutions face rising, intensifying pressure to modernize, right down to their core systems. Much of that pressure comes from regulations and compliance frameworks such as the General Data Protection Regulation (GDPR) and Service Organization Control Type 2 (SOC 2), which demand that organizations establish and maintain robust cybersecurity policies.

Empowerment without Clarity Is Chaos

The companies we work with at Tanzu by Broadcom are constantly looking for better, faster ways of developing and releasing quality software. But digital transformation means fundamentally changing the way you do business, a process that can be derailed by any number of obstacles. In his recent video series, my colleague Michael Coté identifies 14 reasons why it’s hard to change development practices in large organizations.

Client Testimonial - Carhartt

#GitHub Copilot Chat is out of Beta #shorts

Quick #Git Tip: Diffs #shorts

The Cost Benefits Of Using Scaling Within An EKS Cluster

VMware Tanzu vs OpenShift

Cortex Quickstart

What's New in Docker 2023? Discover New Docker Features with Francesco Ciulla! - Navigate Europe 23

Portal Mobile App Demo

Top Use Cases for Mock Services in Software Development

Self-Hosted or SaaS, JFrog Has You Covered

Top 10 Platform Engineering Tools You Should Consider in 2024

Architecting For Cost In AWS: Design Patterns And Best Practices

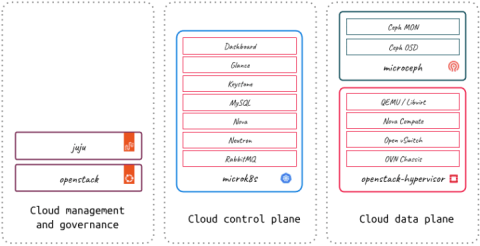

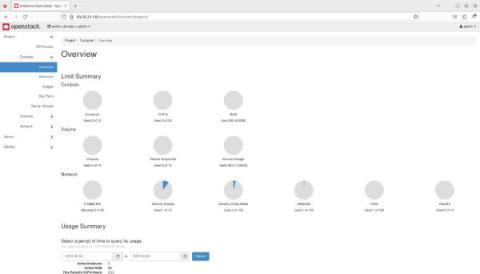

OpenStack with Sunbeam for small-scale private cloud infrastructure

Intro to GitKraken CLI

Getting into Product Management #shorts

Want to take the stress out of multi-repo management? | GitKraken Workspaces #shorts

Harnessing the Power of Metrics: Four Essential Use Cases for Pod Metrics

In the dynamic world of containerized applications, effective monitoring and optimization are crucial to ensure the efficient operation of Kubernetes clusters. Metrics give you valuable insights into the performance and resource utilization of pods, which are the fundamental units of deployment in Kubernetes. By harnessing the power of pod metrics, organizations can unlock numerous benefits, ranging from cost optimization to capacity planning and ensuring application availability.
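As a rough illustration of the cost-optimization and availability use cases, the following Python sketch compares observed pod usage with the pod's requests and limits. It assumes the numbers have already been collected, for example from metrics-server or Prometheus. The `PodUsage` class, the 30% and 90% thresholds, and the sample pods are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PodUsage:
    name: str
    cpu_request_m: int   # CPU request, in millicores
    cpu_used_m: int      # observed CPU usage, in millicores (e.g. p95 over a week)
    mem_limit_mib: int   # memory limit, in MiB
    mem_used_mib: int    # observed memory usage, in MiB


def findings(pod: PodUsage, low: float = 0.3, high: float = 0.9) -> list[str]:
    """Flag right-sizing (cost) and OOM-risk (availability) candidates.
    The thresholds are illustrative assumptions, not Kubernetes defaults."""
    result = []
    if pod.cpu_request_m and pod.cpu_used_m / pod.cpu_request_m < low:
        result.append("cpu-overprovisioned")  # reserved CPU that mostly sits idle
    if pod.mem_limit_mib and pod.mem_used_mib / pod.mem_limit_mib > high:
        result.append("memory-near-limit")    # containers exceeding their memory limit are OOM-killed
    return result


def cpu_slack_m(pods: list[PodUsage]) -> int:
    """Requested-but-unused CPU across pods: a rough input for capacity planning."""
    return sum(max(p.cpu_request_m - p.cpu_used_m, 0) for p in pods)


pods = [
    PodUsage("checkout-1", cpu_request_m=1000, cpu_used_m=150, mem_limit_mib=512, mem_used_mib=300),
    PodUsage("search-1", cpu_request_m=500, cpu_used_m=450, mem_limit_mib=1024, mem_used_mib=980),
]
for p in pods:
    print(p.name, findings(p))  # checkout-1 ['cpu-overprovisioned'], then search-1 ['memory-near-limit']
print(cpu_slack_m(pods))        # 900
```

Using a high percentile rather than an average for `cpu_used_m` is a deliberate choice in this sketch: it avoids right-sizing a pod below its regular peaks.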

Boosting Kubernetes Stability by Managing the Human Factor

As technology takes the driver’s seat in our lives, Kubernetes is taking center stage in IT operations. Google open-sourced Kubernetes in 2014, building on its experience running large-scale workloads. Today, it has become the go-to choice for cloud-native environments. Kubernetes’ primary purpose is to simplify the management of distributed systems and offer a smooth interface for handling containerized applications no matter where they’re deployed.

Striking the Balance: Tips for Enhancing Access Control and Enforcing Governance in Kubernetes

Kubernetes, with its robust, flexible, and extensible architecture, has rapidly become the standard for managing containerized applications at scale. However, it presents its own set of access control and security challenges. Because Kubernetes environments are distributed and dynamic, they require a different access control model from that of traditional monolithic applications.
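Kubernetes’ built-in mechanism for access control is RBAC. The Python sketch below is a simplified model of how RBAC rules are evaluated. Permissions are purely additive, so a request is allowed if any rule matches its API group, resource, and verb, with `*` acting as a wildcard. The sketch ignores `resourceNames`, subresources, non-resource URLs, and the namespace scope of bindings, and all names in it are hypothetical. The `wildcard_rules` helper shows one possible governance check: flagging overly broad rules for review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    api_groups: tuple[str, ...]  # "" denotes the core API group (pods, services, ...)
    resources: tuple[str, ...]
    verbs: tuple[str, ...]


def _matches(allowed: tuple[str, ...], value: str) -> bool:
    return "*" in allowed or value in allowed


def is_allowed(rules: list[PolicyRule], api_group: str, resource: str, verb: str) -> bool:
    """RBAC is additive: allow if any rule matches; there are no deny rules."""
    return any(
        _matches(r.api_groups, api_group)
        and _matches(r.resources, resource)
        and _matches(r.verbs, verb)
        for r in rules
    )


def wildcard_rules(rules: list[PolicyRule]) -> list[PolicyRule]:
    """Governance check: rules using "*" may grant far more than least privilege intends."""
    return [r for r in rules if "*" in r.api_groups + r.resources + r.verbs]


pod_reader = [PolicyRule(("",), ("pods",), ("get", "list", "watch"))]
print(is_allowed(pod_reader, "", "pods", "list"))            # True
print(is_allowed(pod_reader, "", "pods", "delete"))          # False
print(is_allowed(pod_reader, "apps", "deployments", "get"))  # False
print(wildcard_rules([PolicyRule(("*",), ("*",), ("*",))] + pod_reader))
```

Since nothing can revoke a permission once any rule grants it, auditing for broad grants such as wildcards is more effective than relying on later rules to narrow access.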

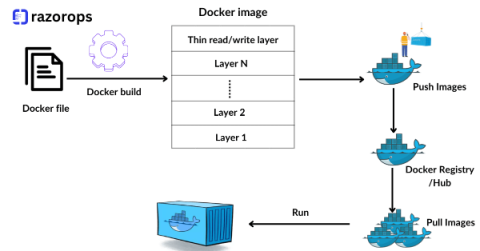

Dockerfile Best Practices for DevOps Engineers

Containerization has become a cornerstone of modern software development and deployment. Docker, a leading containerization platform, has revolutionized the way applications are built, shipped, and deployed. As a DevOps engineer, mastering Docker and understanding best practices for Dockerfile creation is essential for efficient and scalable containerized workflows. Let’s delve into some crucial best practices to optimize your Dockerfiles.
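A few practices appear in almost every Dockerfile guide. Pin base image versions instead of relying on `latest`. Run `apt-get update` and `apt-get install` in the same `RUN` instruction, so a cached update layer is never paired with a newer install. Switch to a non-root `USER`. The Python sketch below checks a Dockerfile’s text for those three issues. It is a hypothetical helper, not a replacement for a dedicated linter such as hadolint, and its simple parser ignores ARG substitution, heredocs, and other advanced syntax.

```python
def logical_lines(dockerfile: str) -> list[str]:
    """Join backslash-continued lines; skip blank lines and comments."""
    lines: list[str] = []
    buf = ""
    for raw in dockerfile.splitlines():
        line = raw.strip()
        if not buf and (not line or line.startswith("#")):
            continue
        if line.endswith("\\"):
            buf += line[:-1] + " "
            continue
        lines.append(buf + line)
        buf = ""
    if buf:
        lines.append(buf.strip())
    return lines


def check_dockerfile(dockerfile: str) -> list[str]:
    """Warn about unpinned base images, a standalone apt-get update, and a
    final stage without a non-root USER. Simplified, hypothetical helper."""
    warnings: list[str] = []
    stages: set[str] = set()
    final_user = None
    for line in logical_lines(dockerfile):
        keyword, _, args = line.partition(" ")
        keyword, args = keyword.upper(), args.strip()
        if keyword == "FROM":
            tokens = [t for t in args.split() if not t.startswith("--")]  # skip --platform=...
            image = tokens[0]
            name = image.rsplit("/", 1)[-1]  # ignore any registry host:port prefix
            if image in stages or image == "scratch" or "@" in image:
                pass  # earlier build stage, empty image, or digest-pinned
            elif ":" not in name:
                warnings.append(f"FROM {image}: no tag means 'latest'; pin a version")
            elif name.endswith(":latest"):
                warnings.append(f"FROM {image}: 'latest' is not reproducible; pin a version")
            if len(tokens) >= 3 and tokens[1].lower() == "as":
                stages.add(tokens[2])
            final_user = None  # USER does not carry over between stages
        elif keyword == "USER":
            final_user = args
        elif keyword == "RUN" and "apt-get update" in args and "apt-get install" not in args:
            warnings.append("RUN apt-get update without install may reuse a stale cached layer")
    if final_user is None or final_user.split(":")[0] in ("root", "0"):
        warnings.append("final stage does not set a non-root USER")
    return warnings


bad = """
FROM python:latest
RUN apt-get update
RUN apt-get install -y curl
"""
good = """
FROM python:3.12-slim AS build
RUN apt-get update && \\
    apt-get install -y --no-install-recommends build-essential
COPY . /app
FROM python:3.12-slim
COPY --from=build /app /app
USER 10001
"""
for warning in check_dockerfile(bad):
    print("-", warning)
print(check_dockerfile(good))  # []
```

The non-root check only looks at the last stage because, by default, that stage is the image that gets built and shipped.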

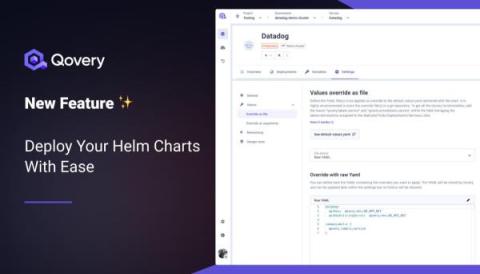

New Feature: Deploy Your Helm Charts With Ease

How to Fix the "DNS Server Not Responding" Error?

With the vast amount of data that is transmitted through the internet, it is essential to have a reliable connection. However, even the most stable connection can run into issues, one of which is the "DNS Server Not Responding" error. This error occurs when your device cannot get a response from its DNS server. As a result, it cannot translate domain names into IP addresses, and websites fail to load even though the underlying network link may still be working.
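When troubleshooting this error, it helps to separate name resolution from basic connectivity. The Python sketch below uses only the standard library. `check_dns` asks the system resolver to resolve a hostname, and `can_connect` opens a TCP connection to an IP address, bypassing DNS entirely. If a connection to a public resolver such as Cloudflare’s `1.1.1.1` on port 53 succeeds while `check_dns` fails, the problem most likely lies with the configured DNS server rather than the network link. The function names and defaults are illustrative assumptions.

```python
import socket


def check_dns(hostname: str, port: int = 443) -> tuple[bool, str]:
    """Resolve a hostname with the system resolver.
    Returns (True, "addr1, addr2") on success or (False, error text) on failure."""
    try:
        infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror as exc:
        return False, f"resolution failed: {exc}"
    addresses = sorted({info[4][0] for info in infos})  # info[4] is the sockaddr tuple
    return True, ", ".join(addresses)


def can_connect(ip: str, port: int = 53, timeout: float = 3.0) -> bool:
    """Open a TCP connection to an IP address, bypassing DNS entirely."""
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    ok, detail = check_dns("example.com")
    print("DNS lookup:", "ok" if ok else "FAILED", "-", detail)
    if not ok and can_connect("1.1.1.1"):
        print("Network is up but name resolution fails: check the configured DNS server.")
```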