
Logging

The latest News and Information on Log Management, Log Analytics and related technologies.

From Spotify to Open Source: The Backstory of Backstage

Technology juggernauts, despite their larger staffs and budgets, still face the same DevOps “cognitive load” that many organizations deal with day to day. That’s what led Spotify to build Backstage, a framework for creating developer portals that supports DevOps and platform engineering practices.

Kubernetes | How to run Elasticsearch, Kafka and Logstash in Kubernetes

ChatGPT and Elasticsearch: APM instrumentation, performance, and cost analysis

In a previous blog post, we built a small Python application that queries Elasticsearch using a mix of vector search and BM25 to help find the most relevant results in a proprietary data set. The top hit is then passed to OpenAI, which answers the question for us. In this blog post, we will instrument a Python application that uses OpenAI and analyze its performance, as well as the cost to run the application.

Amazon Security Lake & ChaosSearch deliver security analytics with industry-leading cost & unlimited retention

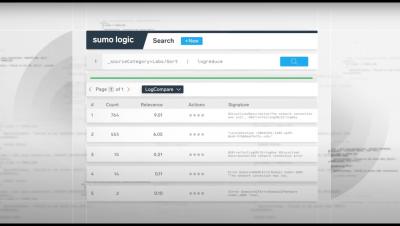

Top 10+ Best Log Monitoring Tools & Software: Free & Paid [2023 Comparison]

In the rapidly evolving digital landscape, companies face a growing number of challenges in maintaining their IT infrastructure and ensuring application stability. Staying on top of all that information is critical to the health of the organization and its business. One way to achieve visibility is to use a log monitoring tool to centralize the log data coming from each application and infrastructure element.

What is Applied Observability?

Monitor your firewall logs with Datadog

Firewall systems are critical for protecting your network and devices from unauthorized traffic. There are several types of firewalls that you can deploy for your environment via hardware, software, or the cloud—and they all typically fall under one of two categories: network-based or host-based. Network-based firewalls monitor and filter traffic to and from your network, whereas host-based firewalls manage traffic to and from a specific host, such as a laptop.

Revolutionizing SAP observability: The Elastic-Kyndryl partnership

Across industries and geographies, businesses rely heavily on Systems, Applications, and Products (SAP) systems. These powerful and versatile systems streamline operations and manage critical data spanning areas like finance, human resources, and supply chain. However, real-time monitoring of these systems, with an in-depth understanding of performance metrics and quick anomaly detection, is paramount for smooth operations and business continuity. It's here that our unique offering steps in.

Are Your Data Pipelines Up to Commercial Standards?

In the data business, we often refer to the series of steps or processes used to collect, transform, and analyze data as “pipelines.” As a data scientist, I find this analogy fitting, as my concerns around data closely mirror those most people have with water: Where is it coming from? What’s in it? How can we optimize its quality, quantity, and pressure for its intended use? And, crucially, is it leaking anywhere?