How to Build a Kafka-Spark-Solr Data Analytics Platform Using Deployment Blueprints

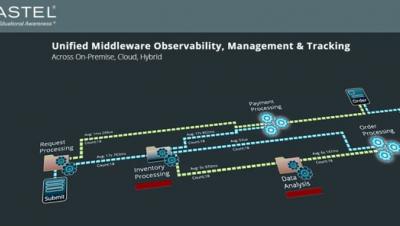

Enterprise applications rely on large amounts of data that need to be distributed, processed, and stored. Data platforms provide these data management services through a combination of open source and commercially supported software stacks, which speeds up the development and deployment of data-hungry business applications. However, building a containerized data analytics platform from several different software stacks comes with a number of deployment challenges.