Collecting Kafka performance metrics

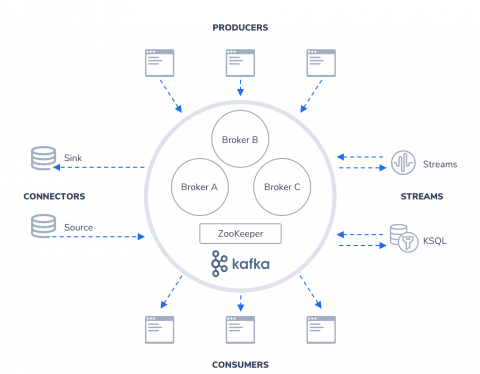

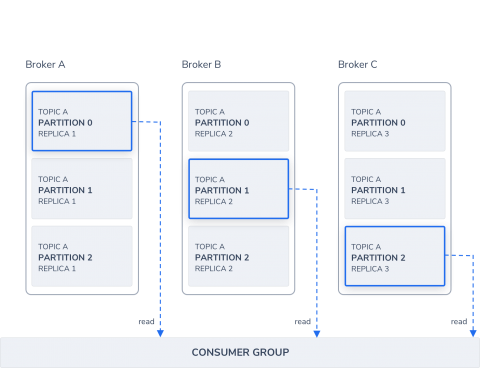

If you’ve already read our guide to key Kafka performance metrics, you’ve seen that Kafka provides a vast array of metrics on performance and resource utilization, which are available in a number of different ways. You’ve also seen that no Kafka performance monitoring solution is complete without also monitoring ZooKeeper. This post covers some different options for collecting Kafka and ZooKeeper metrics, depending on your needs.