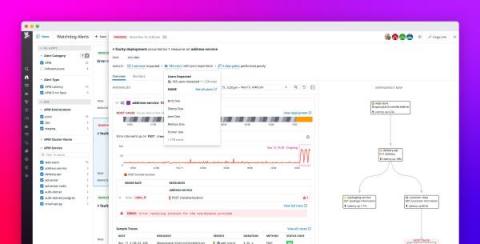

How to Deploy the Splunk OpenTelemetry Collector to Gather Kubernetes Metrics

With Kubernetes emerging as a strong choice for container orchestration in many organizations, monitoring Kubernetes environments is essential to application performance. Kubernetes lets developers build applications from distributed microservices, which introduces challenges not present in traditional monolithic environments. Understanding your microservices environment requires understanding how requests move between the layers of the stack and across multiple services.