How Telemetry Pipelines Save Your Budget

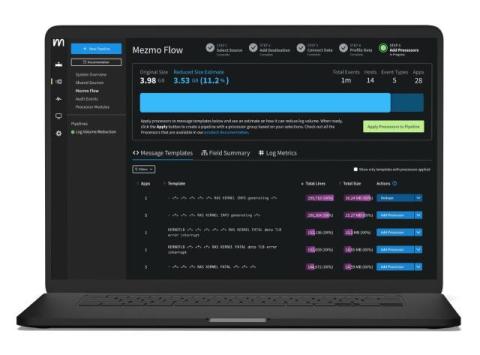

This is an updated version of an earlier blog post, revised to reflect the current definition of a telemetry pipeline and additional capabilities available in Mezmo.

Our recent blog post about observability pipelines highlighted how they centralize telemetry data and make it actionable. A key benefit of telemetry pipelines is that users don't have to compare data sets manually or rely on batch processing to derive insights; they can do so directly while the data is in motion.