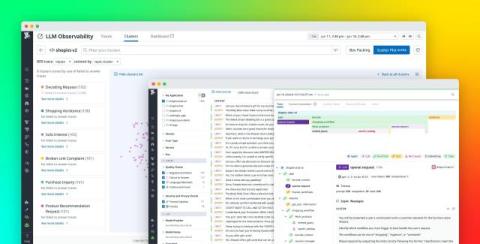

Get granular LLM observability by instrumenting your LLM chains

The proliferation of managed LLM services like OpenAI, Amazon Bedrock, and Anthropic has introduced a wealth of possibilities for generative AI applications. Application engineers are increasingly creating chain-based architectures and using prompt engineering techniques to build LLM applications for their specific use cases.