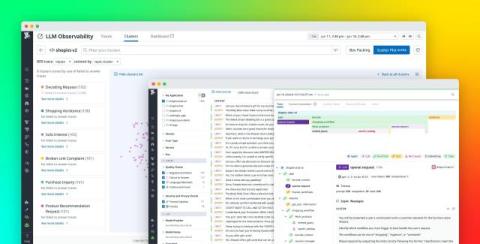

Monitor, troubleshoot, improve, and secure your LLM applications with Datadog LLM Observability

Organizations across all industries are racing to adopt large language models (LLMs) and integrate generative AI into their offerings. LLMs have proven useful for intelligent assistants, AIOps, and natural language query interfaces, among many other use cases. However, running them in production at enterprise scale presents many challenges.