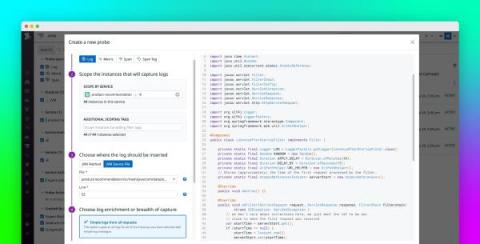

Use Datadog Dynamic Instrumentation to add application logs without redeploying

Modern distributed applications are composed of potentially hundreds of disparate services, all containing code from different internal development teams as well as from third-party libraries and frameworks with limited external visibility. Instrumenting your code is essential for ensuring the operational excellence of all these different services. However, keeping your instrumentation up to date can be challenging when new issues arise outside the scope of your existing logs.