Kafka Migration and Lessons Learned

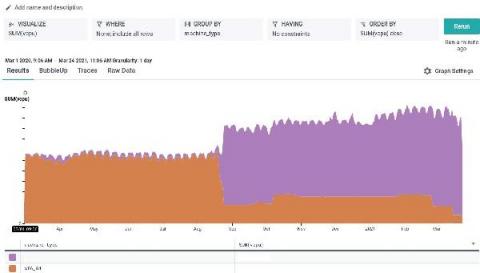

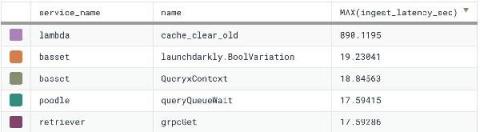

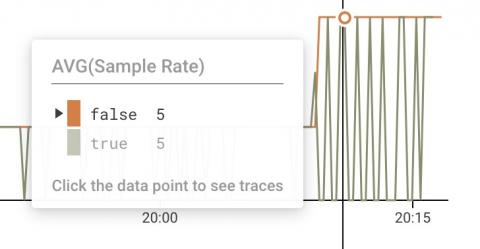

Over the last few months, Honeycomb’s platform team migrated to a new iteration of our ingest pipeline for customer events. The migration to this newer architecture did not go smoothly, as our status page since February attests. There were also many near-incidents where we got paged and reacted quickly enough to avoid major issues. We’ve decided to write a full overview of all the challenges we encountered, which you can download.