AWS Lambda Extensions: What are they and why do they matter

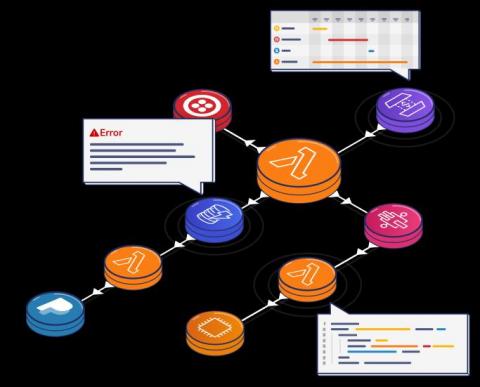

A growing ecosystem of vendors is helping AWS customers gain better observability into their serverless applications. All of them face the same challenge: how to collect telemetry data from AWS Lambda functions in a way that is both performant and cost-efficient. To address this need, Amazon is today announcing the release of AWS Lambda Extensions.