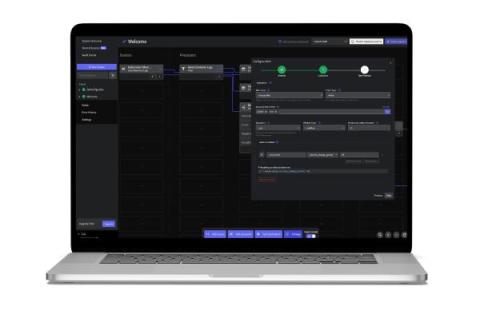

Telemetry Data Compliance Module

Telemetry data sent from applications often contains Personally Identifiable Information (PII) such as names, user IDs, and phone numbers. This information must be obfuscated before the data is sent to storage or observability tools, to comply with corporate policies or government regulations such as HIPAA in the US or the GDPR in the EU.
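
As a rough illustration of what obfuscating a telemetry record before export can look like, the sketch below hashes known sensitive fields and masks phone-like strings in free text. It is not this module's implementation: the field names in `SENSITIVE_KEYS`, the `obfuscate` and `hash_value` helpers, the salt, and the phone pattern are all hypothetical and chosen only for the example.

```python
import hashlib
import re

# Hypothetical field names; a real deployment would configure these.
SENSITIVE_KEYS = {"name", "user_id", "phone"}

# Loose pattern for phone-like digit runs in free text (illustrative only).
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def hash_value(value: str, salt: str = "example-salt") -> str:
    """Replace a value with a truncated, salted SHA-256 digest."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:16]


def obfuscate(record: dict) -> dict:
    """Return a copy of a flat telemetry record with PII obfuscated."""
    cleaned = {}
    for key, value in record.items():
        if key in SENSITIVE_KEYS:
            # Known sensitive field: store a digest instead of the raw value.
            cleaned[key] = hash_value(str(value))
        elif isinstance(value, str):
            # Free text: mask anything that looks like a phone number.
            cleaned[key] = PHONE_RE.sub("[REDACTED]", value)
        else:
            cleaned[key] = value
    return cleaned


event = {
    "user_id": "u-12345",
    "name": "Jane Doe",
    "message": "Callback requested at +1 (555) 010-9999",
    "latency_ms": 42,
}
safe_event = obfuscate(event)
```

Hashing keeps records for the same user correlatable without exposing the raw identifier, while masking removes the value entirely. Which approach a given policy requires is a decision for the compliance owner, not something this sketch settles.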