Data Is a Blizzard: Just Because Each Snowflake Is Unique Doesn't Mean Your Search Tools Have to Be Too

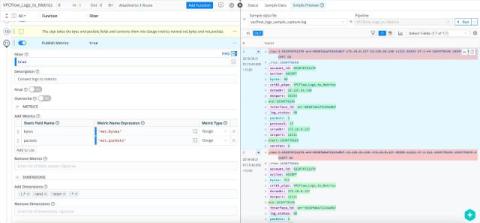

Cribl Search is agnostic: administrators can now query Snowflake datasets just as they can dozens of other lakes, stores, systems, and platforms. The data that IT and security teams rely on to monitor network operations continues to grow at a 28% CAGR, and that growth is straining many organizations’ ability to analyze it effectively. In fact, in some cases, less than 2% of it ever gets looked at.