HoneyByte: Using Application Metrics With Prometheus Clients

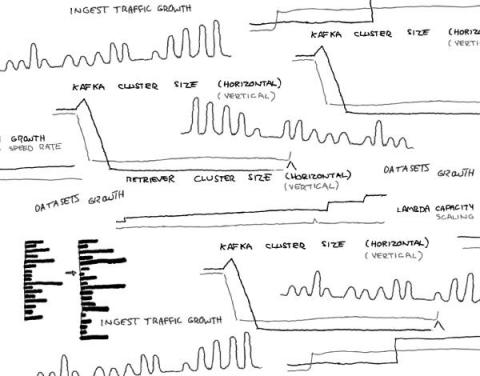

Have you ever dived deep into the sea of your tracing data, only to wish you had more context about the underlying system? For instance, it may be easy to see when and where certain users are experiencing latency, but what if you needed to know whether garbage collection is mucking up the place or how hard allocated memory is taking a beating? Imagine having a complete view of how an application is performing whenever you need it, without having to manually dig through logs and multiple UI screens.