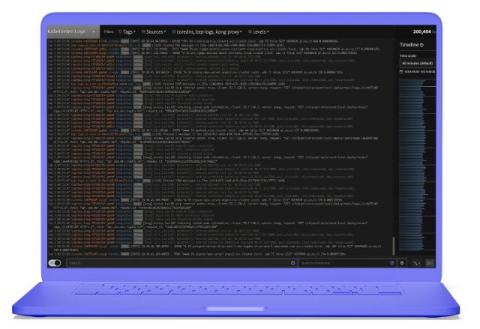

More Value From Your Logs: Next Generation Log Management from Mezmo

Once upon a time, we thought “Log everything” was the way to ensure we had all the data we needed to identify, troubleshoot, and debug issues. But we soon had new problems: cost, noise, and time spent sifting through all that log data. Enter log analysis tools, which refine large volumes of log data and separate the signal from the noise, reducing the mental toil of processing it all. Log beast tamed, for now…