Developing with OpenAI and Observability

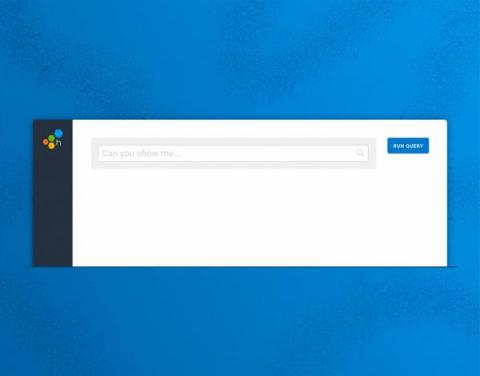

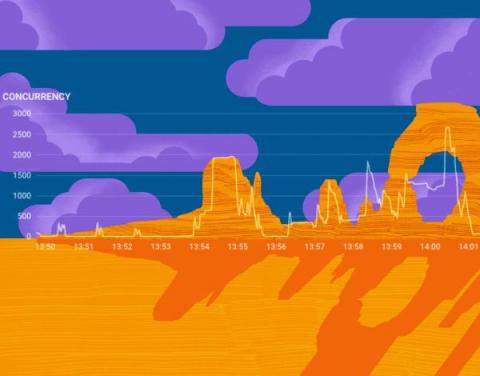

Honeycomb recently released our Query Assistant, which uses ChatGPT behind the scenes to build queries based on your natural language question. It's pretty cool. While developing this feature, our team (including Tanya Romankova and Craig Atkinson) built tracing in from the start, and used it to get the feature working smoothly. Here's an example. This trace shows a Query Assistant call that took 14 seconds. Is ChatGPT that slow? Our traces can tell us!