So We Shipped an AI Product. Did it Work?

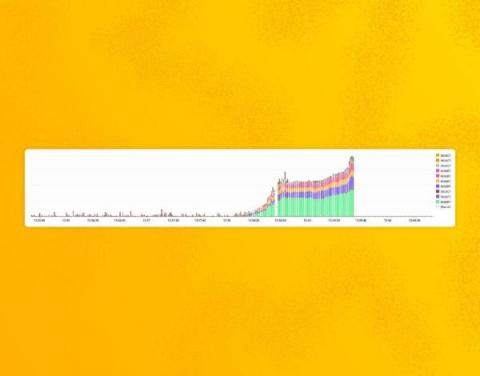

Like many companies, earlier this year we saw an opportunity with LLMs and quickly (but thoughtfully) started building a capability. About a month later, we released Query Assistant to all customers as an experimental feature. We then iterated on it, using data from production to inform a multitude of additional enhancements, and ultimately took Query Assistant out of experimentation and turned it into a core product offering.