
Appfleet

Understanding Amazon Elastic Container Service for Kubernetes (EKS)

Amazon Elastic Container Service for Kubernetes, or EKS, is a managed Kubernetes service. Amazon does the undifferentiated heavy lifting, such as provisioning the cluster and performing upgrades and patching. EKS is not a proprietary AWS fork of Kubernetes in any way, so it is compatible with existing plugins and tooling. This means you can migrate any standard Kubernetes application to EKS without changing your code base.

Autoscaling an Amazon Elastic Kubernetes Service cluster

In this article we are going to consider the two most common methods for autoscaling in an EKS cluster. The Horizontal Pod Autoscaler, or HPA, is a Kubernetes component that automatically scales your service based on metrics such as CPU utilization, as reported through the Kubernetes Metrics Server. The HPA scales the pods in either a Deployment or a ReplicaSet, and is implemented as a Kubernetes API resource and a controller.
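As a rough illustration of the scaling decision the HPA controller makes, the sketch below applies the replica formula described in the Kubernetes documentation: the desired count is the current count multiplied by the ratio of the observed metric to the target, rounded up. The function name, parameter names, and the min/max defaults are assumptions made for this example; the 0.1 tolerance mirrors the controller's documented default. It is a simplified model, not the controller's actual code.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA replica calculation (simplified sketch).

    current_metric and target_metric use the same unit, for example
    average CPU utilization in percent across the pods.
    """
    ratio = current_metric / target_metric
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Four pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

With four pods at 90% CPU and a 60% target, the ratio is 1.5, so this sketch asks for six pods. A reading of 62% falls inside the tolerance band and leaves the count unchanged.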

Cloud-native benchmarking with Kubestone

Organizations are increasingly looking to containers and distributed applications to provide the agility and scalability needed to satisfy their clients. While doing so, modern enterprises also need the ability to benchmark their applications and be aware of certain metrics in relation to their infrastructure. In this post, I introduce a cloud-native benchmarking tool known as Kubestone.

Enabling multicloud K8s communication with Skupper

Engineering teams face many challenges when attempting to incorporate a multi-cloud approach into their infrastructure goals. Kubernetes does a good job of addressing some of these issues, but managing communication between clusters that span multiple cloud providers in multiple regions can become a daunting task. Often this requires complex VPNs and special firewall rules to enable multi-cloud cluster communication.

Optimize Ghost Blog Performance Including Rewriting Image Domains to a CDN

The Ghost blogging platform offers a lean and minimalist experience, and that's why we love it. Unfortunately, it can sometimes be too lean for our requirements. Web performance has become more important and relevant than ever, especially since Google started including it as a parameter in its SEO rankings. We make sure to optimize our websites as much as possible to offer the best possible user experience.

Local Kubernetes testing with KIND

If you've spent days (or even weeks?) trying to spin up a Kubernetes cluster for learning purposes or to test your application, then your worries are over. Spawned from a Kubernetes Special Interest Group, KIND is a tool that provisions a Kubernetes cluster running IN Docker.

Introduction to KUDO: Automate Day-2 Operations (II)

In a previous article, we discussed KUDO and its benefits when you want to create or manage Operators. In this article we will focus on how to start working with KUDO: installing it, using a predefined Operator, and creating your own. Installing KUDO: the first step is to install the CLI plugin so that you can manage KUDO from the command line. Depending on your OS, you can use a package manager such as Brew or Krew; however, installing the binary directly is also a straightforward option.

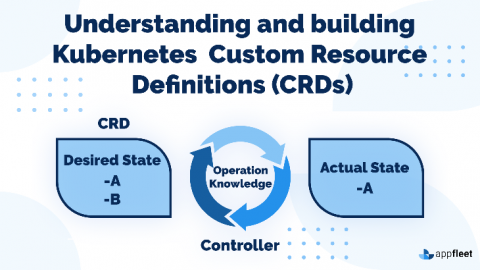

Understanding and building Kubernetes Custom Resource Definitions (CRDs)

Let's say you have a service or application built on an orchestration platform such as Kubernetes. In building it, you must also address an overflowing array of architectural issues, including security, multi-tenancy, API gateways, CLI, configuration management, and logging. Wouldn't you like to save some manpower and development time and focus on creating something unique to your problem? Well, it just so happens that your solution lies in what's called a Custom Resource Definition, or CRD.

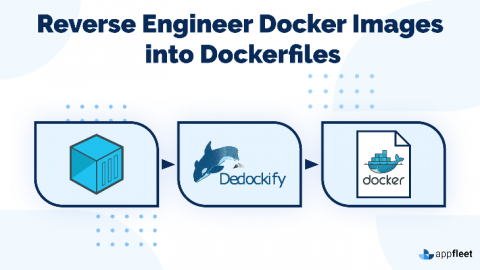

Reverse Engineer Docker Images into Dockerfiles

This article explores how we can reverse engineer Docker images by examining the internals of how Docker images store data, how to use tools to examine the different aspects of the image, and how we can create tools like Dedockify to leverage the Python Docker API to create Dockerfiles from source images.
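To give a flavor of the idea, here is a minimal sketch of turning image history into Dockerfile-like lines. It is not Dedockify's implementation. It assumes history entries shaped like those returned by the Docker SDK for Python (a list of dicts with a `CreatedBy` key, newest layer first, as from `image.history()`), and it only handles the classic `/bin/sh -c` format, not BuildKit-style entries. The function name `history_to_dockerfile` and the sample data are made up for this example.

```python
# Prefixes used by the classic builder in the "CreatedBy" field.
NOP_PREFIX = "/bin/sh -c #(nop) "   # metadata instructions (CMD, ENV, ADD, ...)
RUN_PREFIX = "/bin/sh -c "          # shell commands from RUN instructions


def history_to_dockerfile(history):
    """Convert image history entries into approximate Dockerfile lines.

    history: list of dicts with a "CreatedBy" key, newest layer first.
    """
    lines = []
    # History is newest first, so reverse it to get build order.
    for entry in reversed(history):
        created_by = entry.get("CreatedBy", "")
        if created_by.startswith(NOP_PREFIX):
            # The instruction is stored verbatim after the "#(nop)" marker.
            lines.append(created_by[len(NOP_PREFIX):].strip())
        elif created_by.startswith(RUN_PREFIX):
            lines.append("RUN " + created_by[len(RUN_PREFIX):].strip())
        elif created_by:
            # Keep anything unrecognized as a comment rather than guessing.
            lines.append("# unrecognized: " + created_by)
    return lines


if __name__ == "__main__":
    # Hypothetical sample data standing in for image.history() output.
    sample = [
        {"CreatedBy": '/bin/sh -c #(nop)  CMD ["bash"]'},
        {"CreatedBy": "/bin/sh -c apt-get update"},
        {"CreatedBy": "/bin/sh -c #(nop) ADD file:abc in / "},
    ]
    print("\n".join(history_to_dockerfile(sample)))
```

The result is only an approximation of the original Dockerfile: the `FROM` line and the source files behind `ADD` or `COPY` cannot be recovered from history alone.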