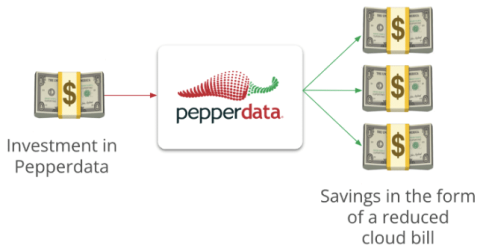

Pepperdata: "Sounds Too Good to Be True"

"How can there be an extra 30% overhead in applications like Apache Spark that other optimization solutions can't touch?" That's a question many Pepperdata prospects and customers ask us. They're surprised, if not downright mind-boggled, to discover that Pepperdata autonomous cost optimization eliminates up to 30% (and sometimes more) of the wasted capacity inside Spark applications.