
CircleCI

Accessibility testing with Cypress

Effective user experience (UX) design is a key factor in creating compelling software products. UX considers the quality of interaction that users have with a product and treats the user’s point of view as paramount in software and product design. A great UX includes accessibility, which ensures that software is inclusive and usable by the widest possible audience.

Manage Kubernetes environments with GitOps and dynamic config

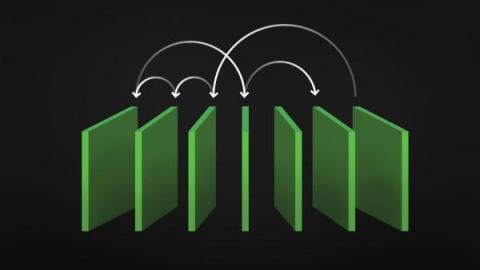

Most modern infrastructure architectures are complex to deploy, involving many parts. Despite the benefits of automation, many teams still choose to configure their architecture manually, with the work carried out by a deployment expert or, in some cases, teams of deployment engineers. Manual configuration opens the door to human error. While DevOps is very useful in developing and deploying software, using Git combined with CI/CD is useful beyond the world of software engineering.

Argo Rollouts at CircleCI: Progressive deployment for agile and efficient releases

At Circle, our traditional approach to Kubernetes (k8s) deployments likely looks familiar to many of you: Run the workflow, create the image, build the Helm chart and deliver it to k8s. At that point, k8s takes over with its rolling update. This method gets the job done, but we knew it wasn’t ideal. Limited support for canary releases and the need for time-consuming error monitoring and manual rollbacks added friction and risk to our release processes.

Helm deployments to a Kubernetes cluster with CI/CD

Containers and microservices have revolutionized the way applications are deployed on the cloud. Since its launch in 2014, Kubernetes has become a de facto standard for container orchestration. Helm is a package manager for Kubernetes that makes it easy to install and manage applications on your Kubernetes cluster. One of the benefits of using Helm is that it allows you to package all of the components required to run an application into a single, versioned artifact called a Helm chart.

Progressive delivery on Kubernetes with CircleCI and Argo Rollouts

Containers and microservices have revolutionized the way applications are deployed on the cloud. Since its launch in 2014, Kubernetes has become a de facto standard for container orchestration. With traditional approaches to deploying applications in production, developers often release updates or new features all at once, which can cause problems if bugs or other defects weren’t caught during testing.

Feature flags for stress-free continuous deployment

Feature flags (also known as feature toggles or switches) are conditional statements in code that determine whether a feature or functionality is visible and accessible to users of an application or service. They offer programmers a powerful tool for managing feature releases. Their capabilities are indispensable in software development, where agility and continuous, automated delivery are paramount.
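To make the idea of a flag as a conditional statement concrete, here is a minimal sketch in Python. The flag name `new_checkout`, the helper `is_enabled`, and the function `render_checkout` are all hypothetical names chosen for illustration; a real project would more likely read flags from a configuration service or a feature-flag platform than from a plain dictionary.

```python
def is_enabled(flag_name: str, flags: dict[str, bool]) -> bool:
    """Return True only if the flag is present and switched on.

    Unknown flags default to off, so a missing entry never exposes
    an unfinished feature.
    """
    return flags.get(flag_name, False)


def render_checkout(flags: dict[str, bool]) -> str:
    """Pick a code path based on a (hypothetical) 'new_checkout' flag."""
    if is_enabled("new_checkout", flags):
        return "new checkout flow"
    return "legacy checkout flow"


if __name__ == "__main__":
    # Flag off (or absent): users keep seeing the existing behavior.
    print(render_checkout({}))
    # Flag on: the new feature becomes visible without a redeploy.
    print(render_checkout({"new_checkout": True}))
```

Defaulting unknown flags to off is one possible design choice: it keeps the existing behavior in place until someone deliberately turns the feature on.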

DevOps language trends 2023: Top tools used by elite software delivery teams

As organizations continue to embrace CI/CD and DevOps in their quest for shorter, more reliable delivery cycles, the choice of programming languages becomes even more critical. The language used to build your applications can affect everything from developer happiness and productivity to your organization’s performance on the four key software delivery metrics.

How to overcome the challenges of ML model development

CD for machine learning: Deploy, monitor, retrain

While there are an increasing number of off-the-shelf machine learning (ML) solutions that promise to adapt to your specific requirements, organizations that are serious about investing in ML for the long term are building their own workflows tailored exactly to their data and the outcomes they expect. To make full use of this investment, ML models must be kept up to date and working from the freshest available data.