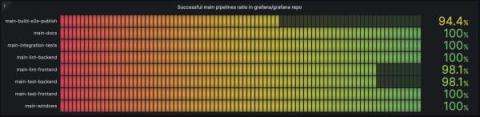

How we reduced flaky tests using Grafana, Prometheus, Grafana Loki, and Drone CI

Flaky tests are a problem found in almost every codebase. By definition, a flaky test is one that both passes and fails without any change to the code. For example, a flaky test may pass when someone runs it locally but fail on continuous integration (CI). Or it may pass on CI, then fail after someone pushes a commit that doesn't touch anything related to it.
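To make the definition concrete, here is a minimal Python sketch. It is not taken from the setup described in this post. It flags a test as flaky when the test has both passed and failed on the same commit. The `RunResult` record and the `find_flaky_tests` function are hypothetical names chosen for illustration. The sketch only covers the strict "same code, different outcome" case. Catching the second scenario, where an unrelated commit triggers a failure, would also require knowing which changes relate to which tests, and this sketch does not model that.

```python
from collections import defaultdict
from collections.abc import Iterable
from typing import NamedTuple


class RunResult(NamedTuple):
    """One recorded execution of a test (hypothetical record shape)."""
    test_name: str
    commit: str   # code revision the test ran against
    passed: bool


def find_flaky_tests(runs: Iterable[RunResult]) -> set[str]:
    """Return the names of tests that both passed and failed on the same commit.

    A test counts as flaky if its outcome changes while the code does not,
    so results are grouped by (test name, commit).
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.test_name, run.commit)].add(run.passed)
    # A group holding both True and False saw a pass and a fail with no code change.
    return {name for (name, _commit), seen in outcomes.items() if len(seen) == 2}


runs = [
    RunResult("TestLogin", "abc123", True),
    RunResult("TestLogin", "abc123", False),   # same commit, different outcome
    RunResult("TestSearch", "abc123", True),
    RunResult("TestSearch", "def456", False),  # different commit: not flagged here
]
print(find_flaky_tests(runs))  # {'TestLogin'}
```

`TestSearch` is not reported because its failure happened on a different commit. Under this strict definition, that failure could just as easily be a real regression.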