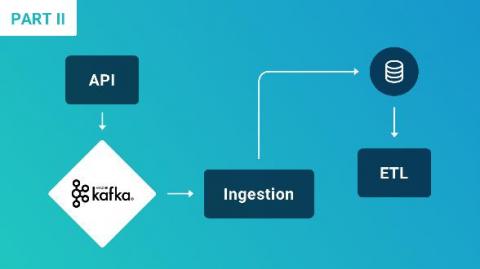

Apache Kafka Example: How Rollbar Removed Technical Debt - Part 2

April 7th, 2020 • By Jon de Andrés Frías

In the first part of our blog series on how we removed technical debt at Rollbar using Apache Kafka, we covered several important topics. In this second part, we'll give an overview of how our Kafka consumer works, how we monitor it, and the deployment and release process we followed to replace an old system without any downtime.